In a data-driven economy, the ability to build, manage, and scale reliable data infrastructure is not a competitive advantage—it's a fundamental requirement. From powering AI-driven customer experiences in SaaS to ensuring regulatory compliance in fintech, the quality of your data engineering directly dictates your capacity for growth and innovation.

Ineffective practices lead to brittle pipelines, unreliable analytics, and soaring operational costs. This article cuts through the noise to deliver the top 10 data engineering best practices that modern technology leaders and their teams must master. We move beyond theory to provide actionable frameworks, real-world examples, and expert insights you can apply immediately, whether you're building an MVP at a startup or modernizing enterprise systems.

These principles are the blueprint for transforming raw data into tangible business value, ensuring your infrastructure is not just functional but a catalyst for scalability, efficiency, and ROI. You will learn to implement robust systems for:

- Data Pipeline Orchestration: Building resilient, automated data workflows.

- Data Quality and Validation: Ensuring data accuracy and trustworthiness.

- Infrastructure as Code (IaC): Managing data systems with version-controlled code.

- Modular Data Transformation: Using frameworks like dbt for clean, reusable logic.

- Modern Data Architecture: Designing effective data warehouses and lakehouses.

- Real-time Data Streaming: Processing data as it’s generated for immediate insights.

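- Data Governance and Metadata Management: Establishing ownership, policies, and a catalog for your data assets.

- Data Security and Privacy: Protecting sensitive data through encryption, access controls, and compliance.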

- Cost and Performance Optimization: Controlling cloud spend and maximizing efficiency.

- Data Observability and Monitoring: Gaining deep visibility into your data ecosystem’s health.

This guide provides the critical knowledge needed to build a data foundation that supports sustained business success.

1. Data Pipeline Orchestration and Workflow Management

Modern data ecosystems are not linear; they are complex webs of interdependent tasks that ingest, transform, and deliver data. Effective data engineering best practices demand a centralized system to manage this complexity. Data pipeline orchestration is the practice of automating, scheduling, and monitoring complex data workflows from end to end, ensuring tasks run in the correct order, dependencies are met, and failures are handled gracefully.

Why it matters: Without orchestration, teams rely on fragile cron jobs and manual interventions, leading to data quality issues, missed SLAs, and operational chaos. Platforms like Apache Airflow, Dagster, and Prefect allow engineers to define workflows as code, creating Directed Acyclic Graphs (DAGs) that turn convoluted scripts into manageable, observable, and repeatable processes.
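To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library rather than any of the orchestrators named above. The task names and the `PIPELINE` mapping are hypothetical; a real platform adds scheduling, retries, and monitoring on top of this ordering step.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders_customers": {"extract_orders", "extract_customers"},
    "build_revenue_report": {"join_orders_customers"},
}


def execution_order(dag):
    """Return one valid run order; graphlib raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(PIPELINE)
print(order)
```

Declaring dependencies as data, instead of relying on cron timing, is what lets a scheduler decide what can run, what must wait, and what to skip after a failure.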

Actionable Implementation Tips

- Start Small and Iterate: Begin by orchestrating a single, well-understood pipeline. Gradually add more complexity as your team gains confidence with the tool.

- Embrace Idempotency: Design tasks to be idempotent, meaning they can be re-run multiple times without changing the final result. This is crucial for safe and simple failure recovery.

- Containerize Tasks: Use Docker to containerize your pipeline tasks. This ensures consistency between development, testing, and production environments, eliminating "it works on my machine" issues. The principles behind a robust CI/CD pipeline are highly relevant here; reviewing continuous deployment best practices provides a valuable framework for building resilient automated systems.

- Prioritize Monitoring and Alerting: Implement comprehensive monitoring from day one. Set up alerts for pipeline failures, long-running tasks, and SLA breaches to proactively manage pipeline health.
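The idempotency tip above can be sketched as a "replace the partition" load. This is an illustration under stated assumptions, not a prescribed pattern for any particular warehouse: the `daily_sales` table, the `load_partition` helper, and the sample rows are all hypothetical, and an in-memory SQLite database stands in for the real target.

```python
import sqlite3


def load_partition(conn, day, rows):
    """Replace the whole partition for `day` so a rerun gives the same result."""
    with conn:  # one transaction: commits on success, rolls back on error
        conn.execute("DELETE FROM daily_sales WHERE day = ?", (day,))
        conn.executemany(
            "INSERT INTO daily_sales (day, order_id, amount) VALUES (?, ?, ?)",
            [(day, order_id, amount) for order_id, amount in rows],
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (day TEXT, order_id TEXT, amount REAL)")

rows = [("o-1", 19.99), ("o-2", 5.00)]
load_partition(conn, "2024-01-01", rows)
load_partition(conn, "2024-01-01", rows)  # simulated retry after a failure

row_count = conn.execute("SELECT COUNT(*) FROM daily_sales").fetchone()[0]
print(row_count)
```

Because the delete and insert share one transaction, a retry never leaves duplicates or a half-written partition behind.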

2. Data Quality and Validation Frameworks

Data is the lifeblood of modern business, but its value is directly tied to its quality. Poor-quality data leads to flawed analytics, broken machine learning models, and poor business decisions. Therefore, a core tenet of data engineering best practices is implementing robust data quality and validation frameworks. This practice involves systematically checking data for accuracy, completeness, consistency, and timeliness at every stage of the pipeline.

Why it matters: This proactive approach turns data quality from a reactive cleanup effort into a foundational, automated part of the data lifecycle. Tools like Great Expectations, dbt tests, and Soda allow engineers to define data quality rules as code, creating "data contracts" that integrate directly into CI/CD workflows. For example, a financial services firm can automatically validate that transaction records have a non-null ID, preventing corrupt data from contaminating downstream reports.

Actionable Implementation Tips

- Start with Critical Assets: Don't try to boil the ocean. Begin by applying data quality checks to your most critical datasets—those feeding key business reports or customer-facing applications.

- Integrate Validation into CI/CD: Embed data quality tests directly into your CI/CD pipeline. A `dbt test` or `great_expectations checkpoint run` should be a mandatory step before merging code, preventing quality regressions from ever reaching production.

- Establish Tiered Alerting: Not all data quality issues are equally urgent. Configure alerts to differentiate between warnings (e.g., a minor shift in data distribution) and critical failures (e.g., a high percentage of null values in a primary key).

- Define and Monitor Data SLAs: Document clear Service Level Agreements (SLAs) for data quality, freshness, and availability for each key dataset. Use monitoring tools to track these metrics and provide visibility to all stakeholders.
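The tips above can be sketched in plain Python to show the shape of "rules as code" with tiered severity. This is not the API of Great Expectations, dbt, or Soda; the `CheckResult` type, the check names, and the 5% threshold are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    severity: str  # "critical" or "warning"
    passed: bool


def validate_transactions(rows, max_null_amount_ratio=0.05):
    """Run two illustrative checks over a list of dict records."""
    total = len(rows)
    null_ids = sum(1 for r in rows if r.get("transaction_id") is None)
    null_amounts = sum(1 for r in rows if r.get("amount") is None)
    amount_ratio = null_amounts / total if total else 0.0
    return [
        CheckResult("transaction_id_not_null", "critical", null_ids == 0),
        CheckResult("amount_null_ratio", "warning", amount_ratio <= max_null_amount_ratio),
    ]


def should_block_merge(results):
    """Only failed critical checks stop the pipeline; warnings are reported."""
    return any(r.severity == "critical" and not r.passed for r in results)


sample = [
    {"transaction_id": "t-1", "amount": 10.0},
    {"transaction_id": None, "amount": 12.5},
    {"transaction_id": "t-3", "amount": None},
]
results = validate_transactions(sample)
blocked = should_block_merge(results)
print(blocked)
```

In a CI job, a result like `blocked` would typically translate into a non-zero exit code so the merge cannot proceed.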

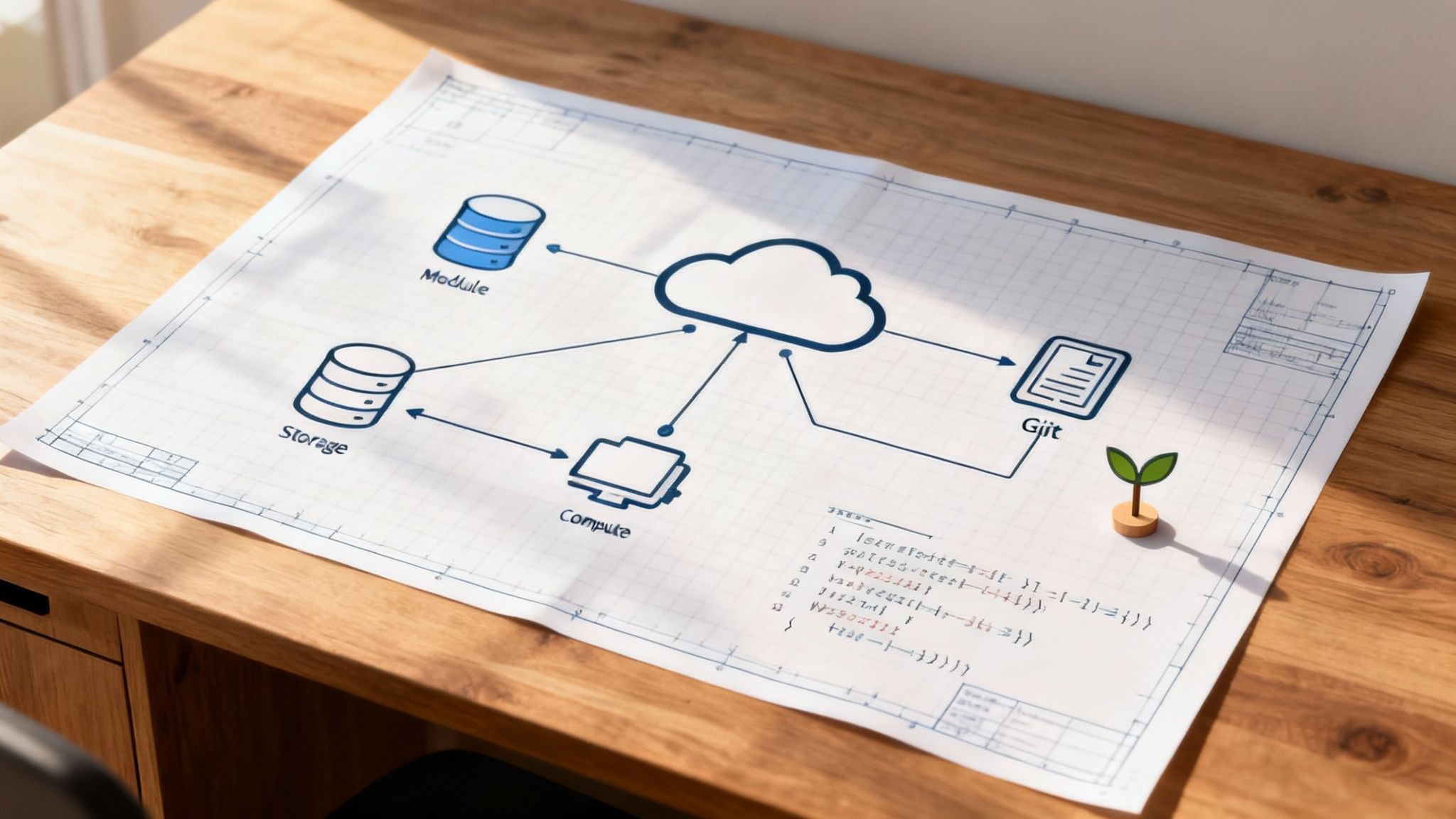

3. Infrastructure as Code (IaC) for Data Systems

Manually configuring data infrastructure is a recipe for inconsistency, drift, and operational errors. The best practice is to treat your infrastructure—databases, warehouses, cloud services, and clusters—as you would your application code. Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, using tools like Terraform or AWS CloudFormation.

Why it matters: IaC brings the same rigor of software development—versioning, testing, and peer review—to your infrastructure. A SaaS company can define its entire Snowflake data warehouse setup in a Terraform file. This file is version-controlled in Git, enabling teams to propose changes via pull requests, validate them in a CI/CD pipeline, and apply them with confidence. This eliminates configuration drift and makes disaster recovery a predictable, automated process.

Actionable Implementation Tips

- Use Separate State Files per Environment: Maintain distinct state files (e.g., in Terraform) for development, staging, and production. This isolates environments and prevents accidental changes in production.

- Version Control Everything: Commit all IaC files to a Git repository. This creates an auditable history of every infrastructure change, which is crucial for compliance and debugging.

- Build Reusable Modules: Create modular, reusable components for common infrastructure patterns, such as a standard data processing cluster or a secure S3 bucket configuration. This accelerates provisioning and enforces organizational standards.

- Implement Change Approval Workflows: Protect your production environment by requiring peer reviews and automated checks for any infrastructure change proposals. This is a core principle in modern DevOps consulting services, ensuring stability and collaboration.
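The core idea behind declarative IaC is comparing a declared state with the actual one and deriving the changes. The toy sketch below shows that comparison only; it is not how Terraform or CloudFormation compute plans internally, and the resource names and settings are hypothetical.

```python
def plan_changes(desired, actual):
    """Compare declared resources with what exists and list the actions needed."""
    plan = []
    for name, config in sorted(desired.items()):
        if name not in actual:
            plan.append(("create", name))
        elif actual[name] != config:
            plan.append(("update", name))
    # Anything that exists but is no longer declared counts as drift to remove.
    for name in sorted(actual.keys() - desired.keys()):
        plan.append(("delete", name))
    return plan


desired = {
    "warehouse_analytics": {"size": "SMALL", "auto_suspend_seconds": 60},
    "bucket_raw": {"encrypted": True},
}
actual = {
    "warehouse_analytics": {"size": "LARGE", "auto_suspend_seconds": 60},
    "bucket_tmp": {"encrypted": False},
}
plan = plan_changes(desired, actual)
print(plan)
```

Reviewing a plan like this in a pull request, before anything is applied, is what makes infrastructure changes auditable.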

4. Modular Data Transformation and dbt Framework

Raw data is rarely ready for analysis; it requires cleaning, joining, and aggregating. A core data engineering best practice is to manage these transformations with the same rigor as application code. The dbt (data build tool) framework enables this by bringing software engineering principles like modularity, version control, and testing directly to the analytics workflow.

Why it matters: This "analytics engineering" approach replaces brittle, monolithic ETL scripts with a transparent, testable, and maintainable codebase for analytics. By treating transformations as code stored in a Git repository, teams at companies like Spotify and GitLab can collaborate effectively, deploy changes through CI/CD pipelines, and automatically generate documentation. dbt also resolves the dependencies between models, ensuring transformations run in the correct sequence.

Actionable Implementation Tips

- Organize Models into Logical Layers: Structure your dbt project with clear layers, such as `staging` (for light cleaning), `intermediate` (for complex business logic), and `marts` (for analysis-ready datasets). This separation of concerns simplifies development and debugging.

- Implement Comprehensive Testing: Use dbt's built-in testing capabilities (`unique`, `not_null`) and custom SQL-based tests to validate data integrity and catch quality issues before they impact downstream dashboards.

- Document Assumptions and Calculations: Leverage dbt's YAML configuration to document every model and column. This documentation is version-controlled and can be automatically rendered into a searchable website, creating a single source of truth for business logic.

- Use Source Freshness Checks: Configure source freshness tests to monitor the timeliness of your raw data. This allows you to proactively detect and alert on upstream data loading issues, ensuring your transformations are always running on current data.
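In dbt itself, freshness checks are configured in YAML. The Python sketch below only illustrates the logic of a freshness check with warn and error thresholds; the `freshness_status` function and the threshold values are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def freshness_status(last_loaded_at, now, warn_after, error_after):
    """Classify a source as 'pass', 'warn', or 'error' from the age of its newest record."""
    age = now - last_loaded_at
    if age > error_after:
        return "error"
    if age > warn_after:
        return "warn"
    return "pass"


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
status = freshness_status(
    last_loaded_at=now - timedelta(hours=7),
    now=now,
    warn_after=timedelta(hours=6),
    error_after=timedelta(hours=24),
)
print(status)
```

Running a check like this before transformations start gives a clear signal that the problem is upstream, not in the models.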

5. Modern Data Warehouse and Lakehouse Architecture

The foundation of any analytics or machine learning initiative is its data storage layer. A well-designed architecture for your data warehouse or lakehouse is crucial for performance, scalability, and cost control. This practice involves making strategic choices about how data is structured, stored, and accessed to create a highly optimized and queryable asset.

Why it matters: The storage layer determines whether data-driven decisions can be made at scale. Modern cloud platforms like Snowflake, Google BigQuery, and Databricks have redefined this space with massively parallel processing that separates storage from compute. This allows organizations to scale each independently and pay only for what they use. For example, a fintech platform can migrate from a costly on-premise cluster to Snowflake, drastically reducing operational overhead and improving query speeds.

Actionable Implementation Tips

- Design Schemas for Query Patterns: Instead of strictly adhering to traditional normalization, design schemas to optimize for common analytical queries. This often involves denormalization to create wide tables that reduce complex joins and improve performance.

- Implement Strategic Partitioning and Clustering: Partition large tables based on frequently filtered columns, such as date or region. This prunes the amount of data scanned in a query, leading to faster results and lower costs.

- Leverage Native Semi-Structured Data Support: Modern warehouses excel at handling semi-structured data such as JSON. Instead of flattening this data during ingestion, load it into a native semi-structured column (for example, Snowflake's VARIANT type or BigQuery's JSON type) and query it directly using SQL extensions. This simplifies pipelines and preserves the original data structure.

- Establish Data Lifecycle Management: Not all data needs to be immediately accessible. Implement retention policies to automatically archive older, less-used data to cheaper storage tiers (e.g., from Snowflake to Amazon S3). This practice is critical for managing storage costs without losing valuable historical information.
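The toy model below shows why partitioning cuts the data scanned. It is a sketch, not a warehouse implementation: the `PARTITIONS` dictionary stands in for a date-partitioned table, and all values are made up.

```python
from datetime import date

# Hypothetical table stored as one list of rows per date partition.
PARTITIONS = {
    date(2024, 1, 1): [{"region": "EU", "amount": 10.0}, {"region": "US", "amount": 7.0}],
    date(2024, 1, 2): [{"region": "EU", "amount": 4.0}],
    date(2024, 1, 3): [{"region": "US", "amount": 9.0}],
}


def total_amount(partitions, start, end):
    """Sum amounts for start <= day <= end, reading only matching partitions."""
    scanned = 0
    total = 0.0
    for day, rows in partitions.items():
        if not (start <= day <= end):
            continue  # pruned: rows in this partition are never read
        scanned += len(rows)
        total += sum(row["amount"] for row in rows)
    return total, scanned


total, rows_scanned = total_amount(PARTITIONS, date(2024, 1, 2), date(2024, 1, 3))
print(total, rows_scanned)
```

The filter on the partition key is evaluated before any rows are touched, which is why queries that filter on that key cost less on scan-priced platforms.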

6. Real-time Data Streaming and Event-Driven Architecture

In contrast to traditional batch processing, modern data engineering best practices increasingly rely on real-time data streaming. This approach involves processing data as it's generated, enabling immediate insights and actions. Event-driven architecture is the paradigm that powers this, where systems react to "events" like a user click, a financial transaction, or a sensor reading.

Why it matters: Without real-time capabilities, businesses make decisions based on outdated information, losing critical competitive advantages. Technologies like Apache Kafka and AWS Kinesis are cornerstones of this practice. This model is essential for use cases like fraud detection in fintech, real-time inventory management in e-commerce, and live monitoring of IoT devices.

Actionable Implementation Tips

- Implement a Schema Registry: Use a schema registry like Confluent Schema Registry to enforce data contracts between producers and consumers. This prevents data quality issues by ensuring that all events conform to a predefined structure.

- Design for Idempotent Consumers: Build consumer applications to be idempotent. This ensures that processing the same event multiple times (a common occurrence in distributed systems) does not lead to incorrect results or duplicate data.

- Monitor Consumer Lag: Closely monitor "consumer lag", the gap between the newest message produced and the last message a consumer has processed, usually measured in offsets or in time. Rising lag is an early indicator that your consumers cannot keep up with data volume, potentially leading to data staleness.

- Use Dead-Letter Queues (DLQs): Implement a dead-letter queue strategy to handle messages that cannot be processed successfully. Instead of halting the pipeline, poison pill messages are rerouted to a separate queue for later analysis, ensuring the main data flow remains uninterrupted.
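Two of the tips above, idempotent consumers and dead-letter queues, can be sketched together without a broker. In-memory lists stand in for Kafka or Kinesis, and the `event_id` field, the `consume` function, and the payment handler are assumptions for illustration. A production consumer would also need to persist processed IDs durably, ideally in the same transaction as the side effect.

```python
import json


def consume(messages, handler, processed_ids, dead_letters):
    """Process each event at most once; route failures to a dead-letter list."""
    for raw in messages:
        try:
            event = json.loads(raw)
            event_id = event["event_id"]
            if event_id in processed_ids:
                continue  # duplicate delivery: already handled, skip
            handler(event)
            processed_ids.add(event_id)
        except (ValueError, KeyError) as exc:
            # Malformed or incomplete message: park it and keep the stream moving.
            dead_letters.append({"raw": raw, "error": repr(exc)})


balances = {}


def apply_payment(event):
    account = event["account"]
    balances[account] = balances.get(account, 0) + event["amount"]


stream = [
    '{"event_id": "e1", "account": "a", "amount": 50}',
    '{"event_id": "e1", "account": "a", "amount": 50}',  # redelivered duplicate
    "not-json",                                          # poison pill
    '{"event_id": "e2", "account": "a", "amount": 25}',
]
processed, dlq = set(), []
consume(stream, apply_payment, processed, dlq)
print(balances, len(dlq))
```

The duplicate is ignored and the poison pill lands in the dead-letter list, so the final balance reflects each valid event exactly once.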

7. Data Governance and Metadata Management

In a scalable data architecture, data is a product, and it requires rules, ownership, and a clear catalog. Data governance is the framework of policies and processes for managing data assets, while metadata management provides the technical foundation to discover, understand, and trust that data. This practice is crucial for transforming a chaotic "data swamp" into a reliable, secure, and well-documented data warehouse.

Why it matters: Without robust governance, organizations face significant risks, including compliance violations, poor decision-making based on untrusted data, and duplicated engineering efforts. Tools like Apache Atlas and Collibra automate metadata collection, track data lineage from source to dashboard, and provide a central catalog for all stakeholders. For instance, financial institutions leverage these tools to enforce strict regulatory compliance.

Actionable Implementation Tips

- Start with Critical Data Assets: Identify your most critical data domains (e.g., customer, financial, product) and build your governance framework around them first, then expand.

- Automate Metadata Collection: Manually documenting data is unsustainable. Configure your tools to automatically scan and ingest metadata from your data sources, warehouses, and BI platforms to keep your catalog current.

- Establish Clear Ownership and Policies: Assign clear data owners and stewards for each key data asset. Work with them to define access control policies, quality rules, and usage guidelines. For a more in-depth look, understanding the principles of data lifecycle management provides a comprehensive framework for this process.

- Implement Data Lineage Tracking: Use lineage features to visualize the flow of data across your ecosystem. This is invaluable for impact analysis, root cause analysis, and building trust among data consumers.
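Impact analysis over lineage is, at its core, a graph traversal. The sketch below assumes a hypothetical `LINEAGE` mapping from each asset to its direct consumers; catalog tools such as those named above collect this graph automatically and expose it through their own interfaces.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets that read from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.revenue"],
    "staging.customers": ["marts.revenue", "marts.churn"],
    "marts.revenue": ["dashboard.finance"],
}


def downstream_of(lineage, asset):
    """Return every asset reachable from `asset`, i.e. what a change could affect."""
    seen = set()
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


impacted = downstream_of(LINEAGE, "raw.customers")
print(sorted(impacted))
```

Running the same traversal over reversed edges answers the root-cause question instead: which upstream sources could explain a broken dashboard.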

8. Data Security, Privacy, and Encryption

In the modern data landscape, security is not an afterthought; it is a fundamental requirement woven into every data pipeline. Effective data engineering best practices mandate robust security measures to protect sensitive information, maintain customer trust, and meet stringent regulatory requirements like GDPR and CCPA. This involves implementing a multi-layered defense strategy that includes encryption, granular access controls, and data anonymization.

Why it matters: Failing to prioritize security can lead to catastrophic data breaches, severe financial penalties, and irreparable reputational damage. This practice is non-negotiable for organizations handling personally identifiable information (PII) or financial records. The goal is a secure-by-design architecture where security is applied at every stage, from ingestion to consumption.

Actionable Implementation Tips

- Encrypt Everything by Default: Implement encryption for all sensitive data stores (at rest) and network communications (in transit). For a detailed comparison of common cryptographic methods, understanding the differences between AES and RSA encryption can help you make informed architectural decisions.

- Implement Least-Privilege Access: Adopt Role-Based Access Control (RBAC) to ensure users and systems can only access the specific data they need to perform their duties. This minimizes the attack surface and limits the potential impact of a compromised account.

- Leverage Managed Key Services: Use managed services like AWS Key Management Service (KMS) or Google Cloud KMS to handle the creation, storage, and rotation of cryptographic keys. These services offload complex security operations, reducing the risk of human error.

- Conduct Regular Security Audits: Proactively identify and remediate vulnerabilities by performing regular security audits, penetration testing, and code reviews. Implement comprehensive audit logging to track data access and system changes.
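Least-privilege access and audit logging can be illustrated with a deny-by-default check. The roles, dataset names, and `is_allowed` helper below are hypothetical; in practice these rules live in your warehouse or cloud IAM system rather than in application code.

```python
# Hypothetical roles mapped to the (dataset, action) pairs they may perform.
ROLE_PERMISSIONS = {
    "analyst": {("marts.revenue", "read")},
    "pipeline": {("raw.payments", "read"), ("marts.revenue", "write")},
}

audit_log = []


def is_allowed(role, dataset, action):
    """Deny by default: only explicitly granted pairs are permitted."""
    allowed = (dataset, action) in ROLE_PERMISSIONS.get(role, set())
    # Record every decision, including denials, for later audits.
    audit_log.append({"role": role, "dataset": dataset, "action": action, "allowed": allowed})
    return allowed


analyst_reads_mart = is_allowed("analyst", "marts.revenue", "read")
analyst_reads_raw = is_allowed("analyst", "raw.payments", "read")
unknown_role = is_allowed("intern", "marts.revenue", "read")
print(analyst_reads_mart, analyst_reads_raw, unknown_role)
```

An unknown role or an ungranted dataset falls through to a denial, so a missing grant fails closed rather than open.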

9. Cost Optimization and Resource Management

As data volumes and processing demands escalate, cloud infrastructure costs can quickly spiral out of control. A core tenet of modern data engineering best practices is the proactive management of these expenses. Cost optimization is the strategic practice of continuously monitoring, analyzing, and adjusting data infrastructure to achieve the best performance at the lowest possible price.

Why it matters: Without a focus on cost, a high-performing data platform can become a financial liability. This practice involves a disciplined approach to resource allocation, using tools like AWS Cost Explorer and CloudHealth to identify idle resources, inefficient queries, and oversized clusters. Companies have reduced their BigQuery bills by over 40% simply by rewriting inefficient SQL, showcasing the direct ROI of this practice.

Actionable Implementation Tips

- Implement Aggressive Right-Sizing: Continuously monitor resource utilization (CPU, memory, storage) and downsize over-provisioned servers, clusters, and databases. Use cloud provider tools to analyze historical usage patterns.

- Leverage Spot and Reserved Instances: For non-critical, fault-tolerant workloads like batch ETL jobs, use spot instances to achieve savings of up to 90%. For predictable workloads, purchase reserved instances or savings plans to lock in significant discounts.

- Optimize Expensive Queries: Use query execution plans and cost analysis tools to identify and rewrite inefficient queries. Focus on reducing data scanned by implementing partitioning, clustering, and materialized views.

- Establish Data Lifecycle Policies: Implement automated policies to move cold or infrequently accessed data from expensive, high-performance storage (hot storage) to cheaper, archival tiers like Amazon S3 Glacier or Google Cloud Coldline Storage.
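A right-sizing review can start from something as simple as the sketch below, which flags resources whose average and peak CPU utilization are both low. The thresholds and sample data are assumptions, not recommendations, and real reviews should also consider memory, I/O, and business criticality.

```python
from statistics import mean


def rightsizing_candidates(utilization, mean_below=0.30, peak_below=0.60):
    """Return resources whose mean and peak CPU utilization are both under the thresholds."""
    candidates = []
    for name, samples in sorted(utilization.items()):
        if samples and mean(samples) < mean_below and max(samples) < peak_below:
            candidates.append(name)
    return candidates


# Hypothetical CPU utilization samples (fractions of capacity) per resource.
utilization = {
    "etl-cluster": [0.10, 0.15, 0.20, 0.12],
    "api-db": [0.55, 0.70, 0.65, 0.80],
    "spiky-batch": [0.05, 0.05, 0.95, 0.05],
}
candidates = rightsizing_candidates(utilization)
print(candidates)
```

Checking the peak as well as the mean keeps bursty workloads like `spiky-batch` from being downsized on the strength of a low average alone.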

10. Data Observability and Monitoring

Data systems are dynamic entities where freshness, quality, and pipeline health can degrade silently. Data observability extends beyond traditional monitoring by providing a holistic, end-to-end view of the data lifecycle. It involves collecting logs, metrics, and traces to understand the "why" behind system behavior, not just the "what." This practice is a cornerstone of modern data engineering best practices.

Why it matters: Observability enables teams to proactively detect, diagnose, and resolve data issues before they impact business intelligence reports or customers. Platforms like Monte Carlo Data and Datadog treat data health as a first-class citizen. For instance, a fintech company can use observability to detect a sudden drop in transaction volume, which could indicate an upstream API failure. Without it, such issues might go unnoticed for days.

Actionable Implementation Tips

- Define Data SLAs, SLOs, and SLIs: Establish clear Service Level Agreements (SLAs), Objectives (SLOs), and Indicators (SLIs) for key datasets. These metrics, such as freshness and completeness, create a baseline for what constitutes "good" data.

- Implement Layered Alerting: Create a tiered alerting strategy based on issue severity. A minor schema drift might trigger a low-priority Slack notification, while a critical data freshness SLA breach should page the on-call engineer.

- Correlate Data and Pipeline Health: Use tools that can correlate data quality incidents with pipeline performance metrics. Tying a data anomaly back to a specific code deployment or infrastructure change dramatically shortens root cause analysis time.

- Adopt Distributed Tracing: For complex, multi-stage pipelines, implement distributed tracing to visualize the entire data flow. This helps pinpoint bottlenecks and failure points and shows where time is spent at each stage.
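The SLI, SLO, and layered-alerting tips above can be connected in a few lines. This is a sketch under stated assumptions: the delay samples, the 30-minute target, and the SLO and SLA values are made up, and the channel names stand in for whatever paging and chat tools you use.

```python
def freshness_sli(delays_minutes, target_minutes):
    """SLI: fraction of loads that arrived within the freshness target."""
    if not delays_minutes:
        return 1.0
    on_time = sum(1 for d in delays_minutes if d <= target_minutes)
    return on_time / len(delays_minutes)


def route_alert(sli, slo, sla):
    """Map the measured SLI to an alert channel by severity."""
    if sli < sla:
        return "page"   # commitment to stakeholders breached
    if sli < slo:
        return "slack"  # internal objective missed, commitment still met
    return "none"


delays = [12, 20, 45, 15, 90, 18, 10, 25, 14, 16]  # minutes per load (made-up data)
sli = freshness_sli(delays, target_minutes=30)
channel = route_alert(sli, slo=0.95, sla=0.75)
print(sli, channel)
```

Keeping the SLO stricter than the SLA gives the team a warning band: the low-priority notification fires before the commitment to stakeholders is at risk.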

Data Engineering Best Practices — 10-Point Comparison

| Solution | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Data Pipeline Orchestration and Workflow Management | Medium–High: DAG design and scheduler setup | Orchestration platform, schedulers, monitoring, compute | Reliable, repeatable pipelines with automated scheduling | Scalable ETL, complex dependency management, batch workflows | Dependency tracking, retries, monitoring, team collaboration |
| Data Quality and Validation Frameworks | Medium: rule definition and integration effort | Validation tools, profiling compute, ongoing maintenance | Early detection/prevention of bad data; higher trust in analytics | Compliance-sensitive domains, critical data assets, ML pipelines | Automated checks, anomaly detection, compliance support |
| Infrastructure as Code (IaC) for Data Systems | Medium: learning IaC and managing state | IaC tools, version control, CI/CD, cloud credentials | Reproducible, consistent environments and faster provisioning | Multi-environment provisioning, disaster recovery, infra reproducibility | Reduced config drift, auditable changes, rapid environment spin-up |
| Modular Data Transformation and dbt Framework | Low–Medium: SQL-centric modeling and testing | dbt, data warehouse compute, version control, CI/CD | Testable, documented, and maintainable transformation layer | Analytics engineering, warehouse-centric transformations | SQL-first modularity, built-in tests, auto-docs, CI support |
| Data Warehouse and Lake Architecture | Medium–High: schema design and migration planning | Cloud storage/compute, query engines, governance tooling | Scalable storage/querying and cost-effective analytics at scale | Large-scale analytics, mixed batch/interactive queries | Elastic scaling, separation of storage/compute, faster analytics |
| Real-time Data Streaming and Event-Driven Architecture | High: distributed systems and stream semantics | Brokers (Kafka/Kinesis), stream processors, ops expertise | Low-latency processing and immediate reaction to events | Fraud detection, real-time analytics, event-driven apps | Real-time insights, system decoupling, complex event processing |
| Data Governance and Metadata Management | High: policy design and organizational adoption | Catalog tools, lineage integrations, stewardship resources | Improved discoverability, lineage visibility, regulatory compliance | Regulated industries, large enterprises, multi-source environments | Compliance enablement, impact analysis, centralized metadata |
| Data Security, Privacy, and Encryption | High: encryption, key management, and access controls | KMS, RBAC systems, masking/tokenization tools, audits | Protected sensitive data and adherence to privacy/regulatory rules | Fintech, healthcare, any systems handling PII/PCI | Risk reduction, regulatory compliance, secure data sharing |
| Cost Optimization and Resource Management | Medium: continuous monitoring and policy tuning | FinOps tooling, cost dashboards, monitoring, engineering time | Lower cloud spend and improved resource utilization | Startups on tight budgets, scaling enterprises, cost-sensitive apps | Significant cost savings, better forecasting, efficient scaling |
| Data Observability and Monitoring | Medium: instrumentation, SLOs, and alerting setup | Observability tools, metrics/log storage, alerting systems | Proactive anomaly detection, reduced MTTR, SLA visibility | Mission-critical pipelines, SLA-driven operations, analytics platforms | Early detection, root-cause insights, performance monitoring |

From Principles to Production: Your Next Steps

We've covered ten foundational pillars, from the disciplined orchestration of data pipelines to the strategic imperatives of data governance and observability. Each of these data engineering best practices represents a strategic lever for building a data ecosystem that is not only functional but also resilient, scalable, and value-driven. The real challenge lies in weaving these principles into your daily operations.

Adopting a "data as a product" mentality is paramount. This means treating your data platforms, pipelines, and assets with the same rigor and user-centric focus as a customer-facing application. It requires moving from ad-hoc, reactive fixes to a proactive, systematic approach to building and maintaining your data infrastructure.

Synthesizing Best Practices into a Cohesive Strategy

The power of these principles is magnified when they are implemented as a cohesive whole. Combining Infrastructure as Code with modular dbt transformations creates a highly repeatable and auditable analytics engineering workflow. Layering data observability on top provides immediate insight into performance, cost, and data quality, turning your data stack into a transparent, well-oiled machine. This synergy is what separates a fragile data infrastructure from an enterprise-grade strategic asset.

Your immediate next steps should be pragmatic and impact-oriented. Perform a gap analysis of your current state against the best practices outlined here.

Actionable Next Steps:

- Prioritize by Business Impact: Identify which practice will solve your most pressing problem. Is data quality eroding user trust? Start with a validation framework. Are cloud costs spiraling? Focus on cost optimization.

- Start with a Pilot Project: Select a single, high-visibility data pipeline to serve as your pilot. Use this project to implement a few key practices, like IaC for its resources or dbt for its transformations. This creates a blueprint for success and builds momentum.

- Establish a Center of Excellence: A small group dedicated to championing these data engineering best practices can have an outsized impact. This team can create templates, document standards, and provide guidance to other engineers, ensuring consistency and accelerating adoption.

The Ultimate Goal: Turning Data into a Competitive Advantage

Mastering these concepts is about transforming your data function from a cost center into a strategic enabler of business growth. A well-architected data platform empowers your organization to innovate faster, from launching AI-driven products to delivering personalized customer experiences. It provides the reliable foundation needed for modern fintech platforms, secure enterprise operations, and scalable SaaS products. By embedding these practices into your culture, you build not just robust data pipelines, but a durable competitive advantage that will fuel your business for years to come.

Building and scaling a world-class data engineering function requires specialized expertise. Group 107 provides elite, dedicated offshore data engineers who seamlessly integrate with your team to implement these best practices with precision and efficiency. If you're ready to accelerate your data maturity and build the infrastructure to power your future, connect with us at Group 107 to see how we can help.