Estimating software development time isn't about predicting the future; it's a strategic process of deconstructing complex goals into manageable tasks and forecasting the effort required. The secret is that estimation is not a one-time event. It's an iterative process that you refine as the project evolves, ensuring budgets, resources, and stakeholder expectations remain aligned. For any business, from a SaaS startup launching an MVP to an enterprise finance firm building a new fintech platform, this process is the bedrock of project success.

Why Accurate Development Estimates Matter

An accurate software development estimate shapes your budget, resource allocation, and go-to-market strategy. When leadership has a realistic timeline, they can make informed decisions on marketing campaigns, revenue forecasting, and strategic investments. Get it wrong, and the consequences cascade quickly. Projects that miss deadlines almost inevitably exceed their budgets, which can be a fatal blow for startups and a significant drag on ROI for established enterprises.

The Real-World Consequences of Miscalculation

The challenge of accurately estimating software projects is a long-standing industry problem. The Standish Group's landmark 1994 CHAOS Report found that only about 16% of software projects were completed on time and on budget. Roughly 53% were "challenged" (delivered late, over budget, or with fewer features than specified), and nearly a third were canceled entirely. Among the projects that ran into trouble, average schedule overruns exceeded 100% of the original estimate. While the industry has improved, the core challenge remains. You can explore more insights on the history of the estimation process to understand the evolution of these challenges.

Inaccurate estimates also erode team morale. Nothing burns out skilled developers faster than the relentless pressure of an impossible deadline, leading to stress, compromised code quality, and high employee turnover. When corners are cut to meet unrealistic timelines, technical debt accumulates, costing far more in time and resources to resolve later. This is why a core component of our web development process at Group107 is establishing a realistic plan from day one.

An accurate estimate isn’t just a number; it’s a commitment to transparency and predictability. It provides a clear project roadmap, mitigates scope creep by defining clear boundaries, and fosters a productive environment for the development team.

Viewing estimation as a strategic tool transforms it from a guessing game into a driver of business value, ensuring you hit market windows and achieve a tangible return on investment.

Core Estimation Strategies: Top-Down vs. Bottom-Up

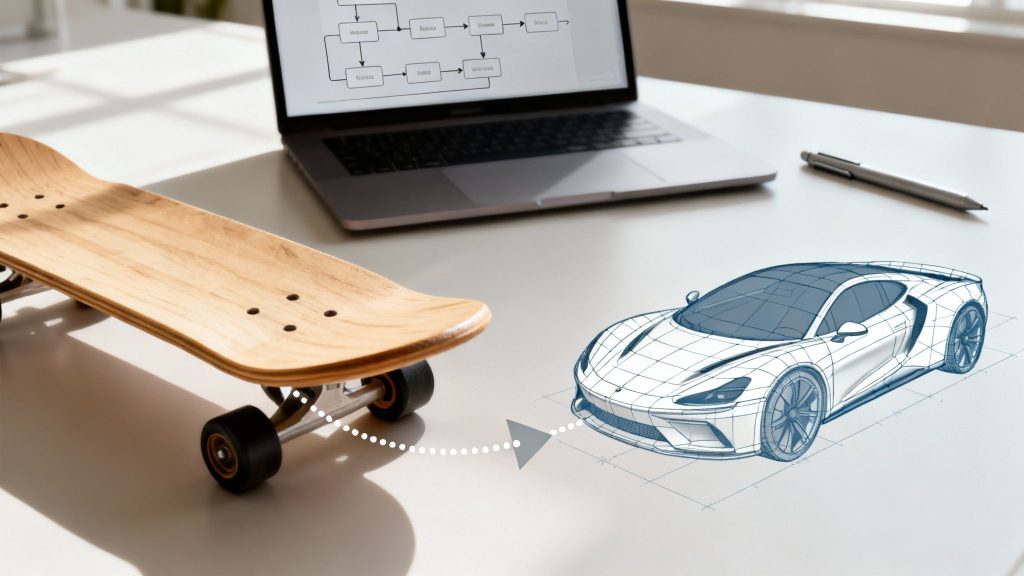

Choosing the right estimation strategy is a critical decision that depends on the project's lifecycle stage. An early-stage budget proposal requires a different approach than a detailed sprint planning session. This is where the fundamental distinction between Top-Down and Bottom-Up estimation becomes vital. Top-down is the telescope for viewing the big picture; bottom-up is the microscope for examining the fine details.

As the image above illustrates, the path to a successful outcome is narrow. A robust estimation process is your guide to staying on track and avoiding the "Over Budget" or "Failed" statuses that plague poorly planned initiatives.

Top-Down Estimation: The 30,000-Foot View

When you need a rapid, high-level forecast with limited details, top-down estimation is the ideal tool. It's perfect for initial feasibility studies, budget allocation, and strategic planning. You're assessing the overall scale of the project before diving into granular task analysis.

Two common top-down methods are:

- Analogous Estimation: This technique leverages experience from similar past projects. For example, "This new user authentication module is architecturally similar to one we built for another client, which took approximately 80 hours. We can use that as our baseline." It’s fast and requires minimal detail, but its accuracy is entirely dependent on the relevance of the historical comparison.

- Parametric Estimation: This is a more quantitative approach that uses historical data and key project variables to model the estimate. For instance, if historical data from your DevOps pipeline shows that setting up and testing one new microservice takes an average of 10 hours, a project requiring 20 new microservices yields a parametric estimate of 200 hours.

These methods provide the speed needed for early-stage decision-making but lack the precision required for detailed execution planning.
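The parametric calculation described above is simple enough to sketch in a few lines. This is only an illustration: the function name `parametric_estimate` is hypothetical, and the figures are the ones from the microservice example.

```python
def parametric_estimate(units: int, hours_per_unit: float) -> float:
    """Scale a historical per-unit effort by the number of units in the new project."""
    if units < 0 or hours_per_unit < 0:
        raise ValueError("units and hours_per_unit must be non-negative")
    return units * hours_per_unit


# Figures from the microservice example: 20 services at 10 hours each.
print(parametric_estimate(units=20, hours_per_unit=10))  # 200
```

The model is only as good as the per-unit figure, so it is worth recalculating that average whenever new project data comes in.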

Bottom-Up Estimation: Getting Into The Weeds

Once a project is approved and you need a detailed execution plan, you must shift to bottom-up techniques. This approach involves deconstructing the entire project into its smallest component tasks, estimating each piece individually, and aggregating the results. While more time-intensive upfront, the resulting accuracy is indispensable for managing sprints and controlling costs.

Work Breakdown Structure (WBS)

A WBS is a hierarchical decomposition of the total project scope. You begin with a major feature, such as an "E-commerce Checkout Process," and break it down into smaller, actionable work packages.

For example, the checkout process could be broken down into:

- Develop user cart summary component (display items, quantities, prices).

- Implement shipping information form with address validation API.

- Integrate Stripe payment gateway for transaction processing.

- Build order confirmation page and trigger transactional email.

This transforms a vague objective into a concrete list of tasks that the team can accurately estimate.
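One way to picture a WBS is as a tree whose leaf estimates roll up into a total. The sketch below assumes a hypothetical `WorkItem` class, and the hour values are invented purely for illustration; they are not estimates for a real checkout build.

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    name: str
    hours: float = 0.0  # leaf estimate; ignored when the item has children
    children: list["WorkItem"] = field(default_factory=list)

    def total_hours(self) -> float:
        """Sum leaf estimates bottom-up through the hierarchy."""
        if not self.children:
            return self.hours
        return sum(child.total_hours() for child in self.children)


# Hypothetical hours for the checkout work packages listed above.
checkout = WorkItem("E-commerce Checkout Process", children=[
    WorkItem("Cart summary component", hours=16),
    WorkItem("Shipping form with address validation", hours=24),
    WorkItem("Stripe payment gateway integration", hours=32),
    WorkItem("Order confirmation page and email", hours=12),
])

print(checkout.total_hours())  # 84
```

Because each work package is itself a `WorkItem`, any of them can be broken down further without changing how the total is calculated.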

Three-Point Estimation (PERT)

This technique explicitly addresses the inherent uncertainty in software development by using a range of estimates for each task.

- Optimistic (O): The best-case scenario, assuming no impediments.

- Pessimistic (P): The worst-case scenario, where significant roadblocks are encountered.

- Most Likely (M): The most realistic estimate based on typical conditions.

These values are then used in a weighted average formula: (O + 4M + P) / 6. This method forces a realistic consideration of risk and builds a data-informed buffer directly into the estimate.
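As a rough sketch, the weighted average can be computed as follows. The standard deviation, (P - O) / 6, is a common PERT convention that the article does not mention; it is included here as an assumption to show how a range can accompany the single number. The task values are hypothetical.

```python
def pert_estimate(optimistic: float, most_likely: float, pessimistic: float) -> tuple[float, float]:
    """Return the PERT weighted average and a conventional standard deviation."""
    if not optimistic <= most_likely <= pessimistic:
        raise ValueError("expected optimistic <= most_likely <= pessimistic")
    expected = (optimistic + 4 * most_likely + pessimistic) / 6
    std_dev = (pessimistic - optimistic) / 6  # common PERT convention, not from the article
    return expected, std_dev


# Hypothetical task: 18 hours best case, 24 most likely, 48 worst case.
expected, std_dev = pert_estimate(18, 24, 48)
print(f"{expected:.1f} h ± {std_dev:.1f} h")  # 27.0 h ± 5.0 h
```

Note how the long pessimistic tail pulls the expected value above the most likely figure of 24 hours; that gap is the buffer the technique builds in.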

The true value of bottom-up estimation lies in the detailed conversations it forces. When a developer must think through every implementation step, they are more likely to identify hidden dependencies, legacy code challenges, or API limitations—complexities that high-level estimates would completely miss.

Of course, the technology landscape constantly evolves. The rise of low-code automation platforms can dramatically accelerate certain tasks, and this must be factored into any modern estimation model.

Comparison of Key Estimation Techniques

To help you select the right method, here’s a summary of the most common techniques. Expert project managers often blend these approaches based on the project phase and available information.

| Technique | Best For | Pros | Cons |
|---|---|---|---|
| Analogous | Early-stage, high-level estimates when data is limited. | Fast and simple. Requires minimal project detail. | Only as accurate as the historical comparison. Highly subjective. |
| Parametric | Projects with repetitive tasks and good historical data. | More objective than analogous. Scalable for large projects. | Requires reliable historical data to be accurate. |
| Three-Point (PERT) | High-risk tasks or projects with significant uncertainty. | Accounts for risk and uncertainty. Provides a realistic range. | Can be more time-consuming to gather three estimates for every task. |
| Work Breakdown (WBS) | Detailed planning, sprint execution, and accurate cost tracking. | Highly accurate and detailed. Improves team understanding. | Time-consuming and requires a well-defined project scope. |

Ultimately, there is no single "best" method. The key is to match the estimation technique to the strategic need—using a top-down approach for the initial business case and a detailed bottom-up analysis for execution.

Using Agile Methods for Dynamic Estimates

In today's fast-paced development environments, traditional, rigid estimation methods often fail. Agile projects require a more fluid, collaborative approach to forecasting that shifts the focus from precise hours to relative effort and team capacity. This change in perspective is crucial for enabling teams to deliver consistent value sprint after sprint.

Agile estimation techniques like Story Points and Planning Poker are designed to embrace uncertainty. They produce estimates that are grounded in the team's collective experience and foster a powerful sense of shared ownership. This approach is built on continuous learning and using empirical data to forecast future work. For a deeper dive into the framework, explore our complete guide to the agile methodology in the software development lifecycle.

Demystifying Story Points

Instead of asking, "How many hours will this take?" agile teams ask, "How large is this task relative to other tasks?" This is the essence of Story Points, an abstract unit of measure that consolidates three key factors:

- Complexity: How difficult is the implementation? Does it involve new technology or complex business logic?

- Uncertainty: How much is unknown? Are the requirements clear, or is there a need for research and discovery?

- Effort: How much work is involved? Is it a minor UI adjustment or a major database refactoring?

Story Points are typically assigned using a Fibonacci-style scale (1, 2, 3, 5, 8, 13…). The widening gaps between values reflect the reality that uncertainty grows with size: the larger the item, the less precisely it can be estimated, so fine distinctions such as 8 versus 9 carry no real information. An 8-point story is not simply a 5-point story with a little extra work; it represents a meaningful step up in complexity, uncertainty, and effort. The key benefit is that relative sizing decouples the estimate from individual developer speed. A 5-point story remains a 5-point story, whether a senior developer completes it in one day or a junior developer takes three.

Running a Planning Poker Session

Planning Poker is a simple yet powerful consensus-based technique for assigning Story Points. It leverages the collective expertise of the entire development team to arrive at a well-vetted estimate.

Here’s a typical workflow for a new feature in a SaaS application:

- The Pitch: The product owner presents the user story, explaining the functionality and its value to the user.

- The Q&A: The development team probes for details, asking about technical implementation, dependencies, and potential risks. This is where ambiguity is clarified.

- The Vote: Each team member privately selects a card representing their estimate of the Story Point value.

- The Reveal: All cards are revealed simultaneously to prevent "anchoring bias," where one person's initial estimate influences others.

- The Debate: If estimates vary significantly (e.g., a 3, a 5, and a 13), the team members with the highest and lowest estimates explain their reasoning. This discussion is invaluable for uncovering hidden complexities or misunderstandings.

- The Re-Vote: The team votes again with this new shared understanding, repeating the process until a consensus is reached.

The primary purpose of Planning Poker is not just to produce a number, but to facilitate a deep, shared understanding of the work. This alignment is what makes the final estimate significantly more reliable.
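The reveal-and-debate steps can be sketched in code. The article does not define when estimates "vary significantly," so the rule below (votes more than one card apart on the scale trigger a debate) is an assumption, as are the function names and the voter names.

```python
SCALE = [1, 2, 3, 5, 8, 13]  # card values from the Story Points scale above


def needs_debate(votes: dict[str, int], max_steps_apart: int = 1) -> bool:
    """True when the revealed cards are spread wider than the allowed number of steps."""
    positions = [SCALE.index(v) for v in votes.values()]
    return max(positions) - min(positions) > max_steps_apart


def voters_to_explain(votes: dict[str, int]) -> tuple[str, str]:
    """Return the lowest and highest voters, who explain their reasoning first."""
    lowest = min(votes, key=votes.get)
    highest = max(votes, key=votes.get)
    return lowest, highest


# Hypothetical round matching the 3 / 5 / 13 example above.
round_one = {"Ana": 3, "Ben": 5, "Chen": 13}
if needs_debate(round_one):
    print(voters_to_explain(round_one))  # ('Ana', 'Chen')
```

A threshold like this is only a prompt for conversation; the team still decides when it has genuinely converged.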

From Points to Predictability

Once your backlog is estimated in Story Points, you can translate these abstract units into a tangible forecast by measuring your team velocity: the average number of Story Points the team completes per sprint (a fixed-length cycle, typically one to four weeks, with two weeks being the most common).

After a few sprints, a reliable pattern emerges. If your team consistently completes around 30 Story Points per sprint, that is your velocity. It's an empirical, data-driven measure of your team's capacity. With this metric, if your project backlog contains 180 Story Points of remaining work, you can forecast that it will take approximately six more sprints (180 ÷ 30) to complete, provided the scope and team composition stay stable. This data-driven approach transforms conversations with stakeholders, replacing arbitrary deadlines with realistic, evidence-based forecasts that build trust and credibility.
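A velocity-based forecast can be sketched as below. The per-sprint figures are hypothetical values that average out to the 30 points used in the example, and reporting a best-to-worst range from the fastest and slowest recent sprints is an assumption added here, not a method the article prescribes.

```python
import math
from statistics import mean


def forecast_sprints(backlog_points: float, recent_velocities: list[float]) -> tuple[int, int, int]:
    """Return (best, expected, worst) sprint counts from recent sprint velocities."""
    if not recent_velocities or min(recent_velocities) <= 0:
        raise ValueError("need at least one positive velocity")
    best = math.ceil(backlog_points / max(recent_velocities))
    expected = math.ceil(backlog_points / mean(recent_velocities))
    worst = math.ceil(backlog_points / min(recent_velocities))
    return best, expected, worst


# Hypothetical last four sprints, averaging 30 points; 180 points remain.
print(forecast_sprints(180, [28, 31, 30, 31]))  # (6, 6, 7)
```

Sharing the range rather than a single number makes it visible that one slow sprint would push the forecast to seven.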

Building Predictive Accuracy with Historical Data

Your most powerful estimation asset is not a formula or a tool; it's your own project history. Past projects are a rich source of data that can ground your forecasts in reality. The goal is to evolve from subjective guessing to making defensible, data-driven predictions. This requires systematically capturing, analyzing, and leveraging data from completed work to establish reliable benchmarks for future initiatives.

What Metrics to Track for Better Estimates

To make historical data useful, you must track the right metrics consistently. A simple spreadsheet or a dedicated project management tool is sufficient to start.

Focus on metrics that directly correlate with effort and timelines:

- Actual vs. Estimated Time: This is the most critical metric. Track it at a granular level (per feature or user story) to identify patterns. For example, if third-party API integrations consistently take 50% longer than estimated, you have identified a systemic planning gap.

- Team Velocity: For agile teams, tracking the number of story points completed per sprint is essential. It provides a reliable measure of your team's throughput.

- Bug Density: Monitor the number of defects discovered per feature or sprint. A high bug count can indicate underlying complexity that was missed during the initial estimation.

- Scope Change Frequency: Document every change request and its impact on the timeline. This data helps quantify the true cost of scope creep and informs how you budget for it in future projects.

By tracking these metrics, you create a powerful feedback loop. This data can be synthesized into a business intelligence report to uncover trends and improve the accuracy of future planning.
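To make the actual-versus-estimated metric concrete, here is a minimal sketch that groups logged tasks by category and reports the average overrun. The record layout, category names, and hour values are all hypothetical; in practice the data would come from your time-tracking or project management tool.

```python
from collections import defaultdict


def overrun_by_category(records: list[tuple[str, float, float]]) -> dict[str, float]:
    """records holds (category, estimated_hours, actual_hours); returns overrun ratios."""
    totals = defaultdict(lambda: [0.0, 0.0])  # category -> [estimated, actual]
    for category, estimated, actual in records:
        totals[category][0] += estimated
        totals[category][1] += actual
    return {
        category: (actual - estimated) / estimated
        for category, (estimated, actual) in totals.items()
        if estimated > 0
    }


# Hypothetical history: API work runs well over, UI work is close to plan.
history = [
    ("api_integration", 20, 31),
    ("api_integration", 10, 14),
    ("ui", 8, 8),
    ("ui", 12, 13),
]
for category, ratio in overrun_by_category(history).items():
    print(f"{category}: {ratio:+.0%}")
# api_integration: +50%
# ui: +5%
```

A consistent overrun in one category, like the 50% on API integrations here, is the kind of systemic gap that can be applied as a correction factor to future estimates.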

A Real-World Financial Services Example

Imagine a financial services client needs a new regulatory compliance module. The initial scope appears straightforward: capture user data, validate it via a government API, and generate a report. Based on similar data-entry features, the team's initial estimate is 120 hours.

However, a review of historical data from a past project involving secure data handling reveals critical insights. The data shows that any feature touching personally identifiable information (PII) consistently required:

- An additional 25% development time for enhanced encryption and security protocols.

- A 40% longer QA cycle to accommodate penetration testing and vulnerability scans.

- A mandatory architectural review with the security team, which historically added a full week to the timeline.

Factoring in this historical data, the revised estimate becomes 210 hours—a 75% increase. This is no longer a guess; it's a forecast grounded in empirical evidence. It allows the project manager to set realistic stakeholder expectations, allocate the necessary resources, and avoid the predictable delays and budget overruns that the initial, overly optimistic estimate would have caused.

Your historical data is your institutional memory. It prevents you from repeating past mistakes and provides the evidence needed to justify estimates, building critical trust with stakeholders.

Validating Estimates with Industry Benchmarks

While internal data is primary, external industry benchmarks provide a valuable sanity check. QSM's analysis of government and commercial projects, for example, shows how historical trendlines can be used to validate vendor bids and internal forecasts. If your estimates align with industry averages for projects of similar complexity, it builds confidence. If they are a significant outlier, it signals a potential risk that requires further investigation.

This approach is particularly valuable for SaaS, finance, and tech companies, as it provides a data-backed foundation for building credibility and ensuring timelines are realistic from the outset.

How to Manage Uncertainty and Project Risks

No software development estimate is foolproof. Even the most meticulously planned project can be derailed by unforeseen challenges. Therefore, effective risk management is not an optional add-on; it is a core component of a resilient estimation process. The objective is not to eliminate uncertainty but to build a plan that can absorb shocks without collapsing. This requires proactively identifying, assessing, and mitigating potential threats.

Buffers vs. Contingency: A Critical Distinction

To manage uncertainty effectively, you must differentiate between its two primary forms. Teams often lump all "just-in-case" time into a generic buffer, which is a mistake. A more strategic approach separates known variables from true unknowns.

- Buffers are allocated for known, predictable risks. This includes time for integrating with a notoriously unreliable third-party API or accommodating the expected back-and-forth of user acceptance testing. You know these activities will introduce delays, so a buffer proactively accounts for them in the schedule.

- Contingency is reserved for unpredictable, high-impact events. These are the "black swan" risks. What if your lead developer resigns mid-project? What if a critical open-source library is suddenly deprecated? You cannot predict what will happen, but you can plan for the fact that something unexpected likely will.

Separating these two concepts enables clearer communication with stakeholders. A buffer is a calculated part of the planned estimate; contingency is an insurance policy for managing unforeseen crises.

Practical Techniques for Risk Assessment

You cannot mitigate risks you have not identified. Vague concerns are not actionable. You need a structured framework to transform project anxieties into a concrete mitigation plan.

Create a Risk Register

This is a living document—often a simple spreadsheet—that logs every potential threat to the project. For each identified risk, you should document:

- A clear description of the risk.

- Its potential impact on the timeline or budget (e.g., adds 2 weeks, increases cost by $20k).

- The probability of its occurrence (High, Medium, Low).

- A specific mitigation plan.

Use a Probability-Impact Matrix

This matrix helps you prioritize risks by plotting each one on a grid based on its likelihood and potential impact. This visualization immediately clarifies where to focus your mitigation efforts. A low-probability, low-impact risk might be acceptable, but a high-probability, high-impact risk demands an immediate and robust response plan.

A risk matrix forces you to confront your most significant threats head-on. It shifts the team from a reactive, firefighting mode to a proactive, strategic mindset—the only way to keep a complex project on track.
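A risk register and a probability-impact ranking can be sketched together. The 1-to-3 numeric scale, the multiplication of probability by impact, and the ratings assigned to each risk are assumptions chosen for illustration; teams commonly use their own scales.

```python
from dataclasses import dataclass

LEVELS = {"Low": 1, "Medium": 2, "High": 3}  # assumed scoring scale


@dataclass
class Risk:
    description: str
    probability: str  # "Low", "Medium", or "High"
    impact: str       # "Low", "Medium", or "High"
    mitigation: str

    @property
    def score(self) -> int:
        """Probability times impact: higher scores need attention first."""
        return LEVELS[self.probability] * LEVELS[self.impact]


def prioritize(register: list[Risk]) -> list[Risk]:
    return sorted(register, key=lambda risk: risk.score, reverse=True)


# Hypothetical ratings for risks mentioned in this article.
register = [
    Risk("Third-party API is unreliable", "High", "Medium", "Add retry logic and a schedule buffer"),
    Risk("Lead developer resigns mid-project", "Low", "High", "Pair on critical modules, document decisions"),
    Risk("Last-minute scope change from marketing", "High", "High", "Agree on a change-request process"),
]
for risk in prioritize(register):
    print(risk.score, risk.description)
# 9 Last-minute scope change from marketing
# 6 Third-party API is unreliable
# 3 Lead developer resigns mid-project
```

The score is only a sorting aid; the mitigation column is what turns the register into a plan.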

Common Risks in Modern Development Projects

While every project is unique, certain risks are common across different domains. Learning to recognize these early can prevent major disruptions. The well-documented lessons from Rockstar Games' GTA VI development delays highlight how quality, scope, and timelines can become dangerously intertwined on large-scale projects.

Common examples include:

- Web Development: Unexpected browser compatibility issues, scope creep driven by last-minute marketing requests, or performance bottlenecks discovered just before launch.

- DevOps: A misconfigured CI/CD pipeline causing deployment failures, uncontrolled cloud costs from orphaned resources, or a security vulnerability in a base container image forcing a widespread rebuild.

- AI Projects: Poor quality training data leading to an inaccurate model, unforeseen biases in the model creating ethical and legal risks, or the "black box" nature of a model making it difficult to debug unexpected outputs.

Countering the Psychology of Optimism

Finally, you must account for human psychology. Developers tend to be optimists: they are paid to solve problems, so they naturally picture the path where everything works. This "optimism bias" can lead to underestimating complexity and overlooking potential roadblocks.

Structured testing is a powerful antidote. As we cover in our guide to alpha and beta testing strategies, early validation can uncover flawed assumptions and hidden risks long before a public launch.

Techniques like Three-Point Estimation (PERT) also help by forcing the team to explicitly consider not just the "most likely" outcome but also the optimistic and pessimistic scenarios. By building a framework that anticipates and plans for uncertainty, you move from hopeful guessing to creating resilient, realistic project roadmaps.

Summary: Your Blueprint for Actionable Software Estimation

There is no single formula for perfect software estimation. The most successful teams employ a strategic blend of proven techniques, collaborative processes, and proactive risk management. When executed correctly, your timelines evolve from optimistic guesses into reliable forecasts that drive business success.

The key is to use the right tool for the right job. High-level methods like Analogous or Parametric estimation are effective for early-stage budgeting. For detailed sprint planning, bottom-up techniques like a Work Breakdown Structure (WBS) are essential. In dynamic environments, agile methods such as Story Points and Planning Poker provide the flexibility and realism needed to keep projects on track.

Your Next Steps to Improve Estimation

Ready to implement these strategies? Here is an actionable checklist to get started:

- Track Your Actuals vs. Estimates: Begin immediately logging the actual time spent on tasks against their original estimates. This is the foundational step for building useful historical data.

- Conduct a Project Post-Mortem: After your next project concludes, analyze where your estimates were accurate and where they missed. Most importantly, identify the root causes of any variances.

- Pilot a Planning Poker Session: For a small, low-risk feature, gather your team and run a trial Planning Poker session. This is an excellent way to introduce a collaborative estimation culture and uncover hidden complexities.

By taking these steps, you can build a more predictable and successful development process. If you are ready to transform accurate estimation into a competitive advantage, the team at Group107 has the expertise to help you implement these strategies effectively.

Got Questions? We've Got Answers

Even with the best processes, estimating software projects can be challenging. Here are our direct answers to some of the most common questions we encounter.

How Do You Estimate a Project with Brand-New Tech?

When faced with an unfamiliar technology stack, your historical data offers little guidance. The solution is not to guess. Instead, isolate the uncertainty by conducting a research spike: a short, time-boxed task (e.g., one or two days) dedicated solely to experimentation. Build a small proof-of-concept to understand the technology's limitations and complexities. This investment will provide the clarity needed to estimate the actual feature work with confidence.

What Are the Best Tools for Estimation?

While the process is more important than the tool, the right software can streamline your efforts. For agile teams, platforms like Jira and Azure DevOps are excellent for managing backlogs, tracking story points, and measuring velocity. For more traditional projects, a well-organized spreadsheet or a tool like Smartsheet is effective for managing a detailed Work Breakdown Structure and comparing estimated vs. actual hours.

How Should I Talk About Uncertainty with Stakeholders?

Never present a single number as a firm commitment. This sets a false expectation of certainty. Instead, always communicate estimates as a range and be transparent about your confidence level. For example: "Our current forecast for this feature is 6 to 8 weeks. We are highly confident in the core development, but there are unknowns related to the third-party API integration. The two-week buffer accounts for that specific risk." This reframes the conversation from hitting an arbitrary date to managing project risks collaboratively.

What Happens When a Project Goes Over Budget?

First, don't panic. It happens. The key is immediate, clear communication. As soon as you foresee a deviation, investigate the root cause. Was it scope creep? An unexpected technical challenge? An overly optimistic initial estimate? Once you understand the 'why,' you can present stakeholders with clear options: de-scope lower-priority features to meet the original deadline, renegotiate the timeline and budget, or re-prioritize the project roadmap. A proactive, solution-oriented approach is critical.

At Group 107, we transform estimation from a source of anxiety into a strategic advantage. With deep expertise in web development, DevOps, and AI, we build projects on a solid foundation of realistic planning and predictable delivery. Learn how we can help you scale your vision with confidence.