In today’s fast-paced digital landscape, manual infrastructure management is a direct bottleneck to growth, scalability, and security. It’s slow, error-prone, and impossible to replicate consistently. This is where Infrastructure as Code (IaC) transforms operations, turning infrastructure provisioning into a version-controlled, automated, and collaborative software engineering discipline.

For any SaaS, fintech, or enterprise organization, adopting IaC isn’t just a best practice; it’s a fundamental requirement for achieving competitive velocity and operational resilience. The challenges associated with effectively managing SaaS infrastructure highlight the non-negotiable role of automation in maintaining uptime, security, and scalability. This article moves beyond theory, providing 10 tangible, in-depth Infrastructure as Code examples across the most critical tools in the DevOps ecosystem.

We will break down each example, offering strategic analysis and actionable takeaways you can implement to streamline your CI/CD pipelines, enhance security, and drive measurable business impact. Whether you’re modernizing a legacy system or building a cloud-native platform from scratch, these examples provide a blueprint for success. You will see practical applications using tools like Terraform, CloudFormation, Ansible, Pulumi, and Bicep, tailored for real-world scenarios in startups, fintech, and large enterprises. This curated collection is designed to give you replicable strategies for building robust, scalable, and secure systems.

1. Terraform for Multi-Cloud Infrastructure Provisioning

Terraform, HashiCorp’s infrastructure provisioning tool, stands out as a premier example of Infrastructure as Code (IaC) due to its cloud-agnostic nature. It uses a declarative configuration language, HCL (HashiCorp Configuration Language), allowing teams to define their desired infrastructure state across multiple providers like AWS, Azure, and GCP in a single configuration. This approach unifies infrastructure management, reduces vendor lock-in, and enables resilient, multi-cloud architectures.

Terraform tracks the resources it manages in a state file, which serves as its record of what exists. When you run a plan, Terraform refreshes that recorded state against the real infrastructure, compares it with your desired configuration, and produces an execution plan to close any gaps. This plan-and-apply workflow provides a critical safety check, showing exactly what changes will be made before they are executed.
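To make the plan step concrete, here is a minimal Python sketch of how a plan can be derived by diffing a desired configuration against recorded state. It is a conceptual model only, not Terraform’s implementation: the resource addresses and attributes are hypothetical, and real Terraform also refreshes state from the providers and distinguishes in-place updates from replacements.

```python
from typing import Dict, List, Tuple

# A resource address (e.g. "aws_s3_bucket.logs") mapped to its attributes.
Resources = Dict[str, Dict[str, object]]


def plan(desired: Resources, state: Resources) -> List[Tuple[str, str]]:
    """Return the (action, address) steps needed to turn `state` into `desired`."""
    actions: List[Tuple[str, str]] = []
    for address in sorted(desired.keys() | state.keys()):
        if address not in state:
            actions.append(("create", address))  # declared but not yet real
        elif address not in desired:
            actions.append(("delete", address))  # real but no longer declared
        elif desired[address] != state[address]:
            actions.append(("update", address))  # attributes differ
        # Identical attributes need no action.
    return actions


# Hypothetical configuration spanning two providers, plus the last recorded state.
desired = {
    "aws_s3_bucket.logs": {"versioning": True},
    "google_storage_bucket.backup": {"location": "EU"},
}
state = {
    "aws_s3_bucket.logs": {"versioning": False},
    "azurerm_resource_group.legacy": {"location": "westeurope"},
}

for action, address in plan(desired, state):
    print(f"{action:>6}  {address}")
```

Running the sketch yields one update, one delete, and one create; planning a configuration against identical state yields an empty list, which mirrors why re-running a plan with nothing changed reports no changes.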

Strategic Breakdown and Application

Terraform’s core strength lies in its modularity and extensive provider ecosystem. Companies like Airbnb and Slack leverage it to manage complex, scalable infrastructure. For a startup building an MVP, Terraform allows for rapid, repeatable environment creation. For an enterprise, it standardizes deployments across different business units, ensuring consistency and compliance.

Key Tactical Insights:

- Code Reusability: Use Terraform modules to encapsulate common infrastructure patterns, such as a standard VPC setup or a containerized application stack. This reduces code duplication and streamlines maintenance.

- Team Collaboration: Always use a remote state backend like Amazon S3 or Azure Blob Storage. This secures the state file, provides locking to prevent concurrent modifications, and enables seamless collaboration within a DevOps team.

- Automated Validation: Integrate `terraform plan` into your CI/CD pipeline. This step automates the review process, allowing engineers to see the impact of their changes in a pull request before merging, which significantly reduces the risk of production errors.
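As a minimal sketch of that CI step, the Python helper below runs `terraform plan` with `-detailed-exitcode`, which makes the command exit with 0 when there are no changes, 1 on error, and 2 when changes are present. It assumes the `terraform` CLI is on the runner’s PATH and that `terraform init` has already run; the function names and status labels are hypothetical.

```python
import subprocess

# Exit codes of `terraform plan -detailed-exitcode`.
NO_CHANGES, ERROR, CHANGES_PRESENT = 0, 1, 2


def interpret_plan_exit(code: int) -> str:
    """Map a -detailed-exitcode result to a CI status label."""
    if code == NO_CHANGES:
        return "clean"
    if code == CHANGES_PRESENT:
        return "changes"  # e.g. post the plan output to the pull request
    return "failed"       # exit code 1 (or anything unexpected) is an error


def run_plan(workdir: str) -> str:
    """Run terraform plan in `workdir` and save the plan file for a later apply."""
    proc = subprocess.run(
        ["terraform", "plan", "-input=false", "-no-color",
         "-detailed-exitcode", "-out=tfplan"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    print(proc.stdout)
    return interpret_plan_exit(proc.returncode)
```

Saving the plan with `-out=tfplan` lets a later `terraform apply tfplan` step apply exactly the changes reviewers approved.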

Actionable Takeaway: For any organization adopting a cloud-first strategy, mastering Terraform is essential for efficient and scalable operations. It provides a unified language to manage resources, which is a foundational element of modern DevOps practices and successful cloud adoption strategies. If you’re proficient with Terraform for multi-cloud infrastructure provisioning and looking for career growth, consider exploring relevant remote Terraform job opportunities.

2. CloudFormation for AWS-Native Infrastructure

AWS CloudFormation is a powerful, native Infrastructure as Code (IaC) service that provides a common language for describing and provisioning all the infrastructure resources in your AWS environment. It uses templates written in either YAML or JSON to model a complete infrastructure setup. This approach allows developers and system administrators to manage their AWS architecture as a single unit, called a stack, ensuring dependencies are handled correctly and deployments are predictable.

CloudFormation works by interpreting your template file and making the necessary API calls to AWS services on your behalf to create, update, or delete resources. A key feature is the “change set,” which lets you preview how proposed changes to a stack might impact your running resources before you implement them. This provides a crucial safety mechanism, preventing unexpected disruptions and making it one of the most reliable infrastructure as code examples for AWS-centric organizations.

Strategic Breakdown and Application

CloudFormation’s core advantage is its deep, seamless integration with the entire AWS ecosystem. Companies like Netflix and Adobe leverage it to manage complex, multi-account AWS deployments and automate scalable infrastructure. For a fintech startup building on AWS, it ensures security and compliance by codifying network rules and IAM policies. For an enterprise, it standardizes resource deployment, from simple S3 buckets to complex serverless applications.

Key Tactical Insights:

- Simplify Serverless: Use the AWS Serverless Application Model (SAM), an open-source framework that extends CloudFormation, to define and deploy serverless applications. SAM simplifies the syntax for declaring functions, APIs, and event sources, accelerating development.

- Modularize Complexity: For large-scale systems, break down your architecture into smaller, manageable pieces using nested stacks or the more recent CloudFormation Modules. This promotes code reuse and makes the infrastructure easier to maintain and update.

- Secure Sensitive Data: Avoid hardcoding secrets like database passwords or API keys in templates. Instead, use dynamic references to pull values directly from AWS Systems Manager Parameter Store or AWS Secrets Manager at deployment time.
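CloudFormation accepts JSON templates, so a short Python generator is enough to show a dynamic reference in place. This is a sketch under stated assumptions: the logical ID `AppDatabase`, the secret name `prod/app-db`, the instance settings, and the premise that the secret stores a JSON object with `username` and `password` keys are all hypothetical.

```python
import json


def rds_template(secret_id: str) -> dict:
    """Build a minimal template whose DB credentials are resolved at deploy time."""
    # Dynamic reference syntax: {{resolve:secretsmanager:secret-id:SecretString:json-key}}
    ref = "{{resolve:secretsmanager:" + secret_id + ":SecretString:%s}}"
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch: RDS instance using Secrets Manager dynamic references",
        "Resources": {
            "AppDatabase": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "Engine": "postgres",
                    "DBInstanceClass": "db.t3.micro",
                    "AllocatedStorage": "20",
                    "MasterUsername": ref % "username",
                    "MasterUserPassword": ref % "password",
                },
            }
        },
    }


print(json.dumps(rds_template("prod/app-db"), indent=2))
```

The rendered template holds only the reference strings; CloudFormation resolves them during stack operations, so the password itself never lands in the template or in version control.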

Actionable Takeaway: For organizations committed to the AWS cloud, mastering CloudFormation is fundamental for achieving operational excellence and automation. It provides a robust, native solution for managing the entire lifecycle of your cloud resources. Adopting CloudFormation is a critical step in building a mature AWS DevOps strategy that ensures consistency, security, and scalability.

3. Ansible for Configuration Management and Orchestration

Ansible, now part of Red Hat, is a powerful open-source automation tool that excels at configuration management, application deployment, and task automation. Unlike tools that require agents on managed nodes, Ansible uses an agentless architecture, connecting over SSH (or WinRM for Windows hosts). It uses simple, human-readable YAML for its configuration files, called “playbooks,” making it one of the most approachable yet robust infrastructure as code examples available.

This agentless approach simplifies management, as there’s no software to install or maintain on the target systems, reducing the overall operational overhead. Ansible works by executing tasks defined in playbooks against an inventory of hosts. This makes it ideal for managing the state of existing servers, orchestrating multi-tier application deployments, and performing routine system administration tasks at scale, from patching servers to provisioning software.

Strategic Breakdown and Application

Ansible’s strength lies in its simplicity and versatility, making it a favorite for both startups and large enterprises like Uber and Twitter for infrastructure automation. For a financial institution, Ansible can enforce security baselines and ensure compliance across a fleet of servers. For a SaaS company, it can orchestrate complex, zero-downtime rolling updates for customer-facing applications, ensuring service continuity and reliability.

Key Tactical Insights:

- Code Reusability: Structure your automation using Ansible Roles. Roles are a way of organizing playbooks and other files into self-contained, reusable components, such as a role for deploying a web server or configuring a database.

- Dynamic Environments: Use dynamic inventory plugins (such as `amazon.aws.aws_ec2` or `azure.azcollection.azure_rm`) or inventory scripts to pull host information automatically from cloud providers like AWS or Azure. This keeps your inventory current without manual intervention, which is critical in elastic cloud environments.

- Idempotent Operations: Build playbooks from idempotent modules, which report a change only when they actually modify the system, and use handlers to restart services only when a notifying task (such as a configuration template) reports a change. This prevents unnecessary service disruptions and ensures playbooks can be run repeatedly with the same predictable outcome.
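The handler pattern is easier to see in a toy model. This plain-Python sketch is not Ansible’s API; the `Host` class, the file path, and the `restart nginx` handler name are hypothetical. It mimics a template task that reports a change only when the content differs, and a handler that runs at most once per play, only when notified.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Host:
    files: Dict[str, str] = field(default_factory=dict)
    restarts: int = 0


def template_task(host: Host, path: str, content: str) -> bool:
    """Idempotent 'write file' task: returns True only if it changed something."""
    if host.files.get(path) == content:
        return False
    host.files[path] = content
    return True


def run_play(host: Host, nginx_conf: str) -> List[str]:
    """Run the task, then flush notified handlers once, like the end of a play."""
    notified: List[str] = []
    if template_task(host, "/etc/nginx/nginx.conf", nginx_conf):
        notified.append("restart nginx")  # equivalent of `notify: restart nginx`
    for handler in dict.fromkeys(notified):  # each handler runs at most once
        if handler == "restart nginx":
            host.restarts += 1
    return notified
```

Running the same play twice with unchanged content restarts the service only once, which is the behavior the `notify`/handler pairing gives you in a real playbook.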

Actionable Takeaway: For organizations needing to automate the configuration and lifecycle of existing infrastructure, Ansible provides a low-barrier-to-entry solution. It is a cornerstone of modern IT automation and a key part of embracing the core principles of DevOps services for streamlined operations. Integrating Ansible into your CI/CD pipeline for application deployment and post-provisioning setup is a highly effective strategy.

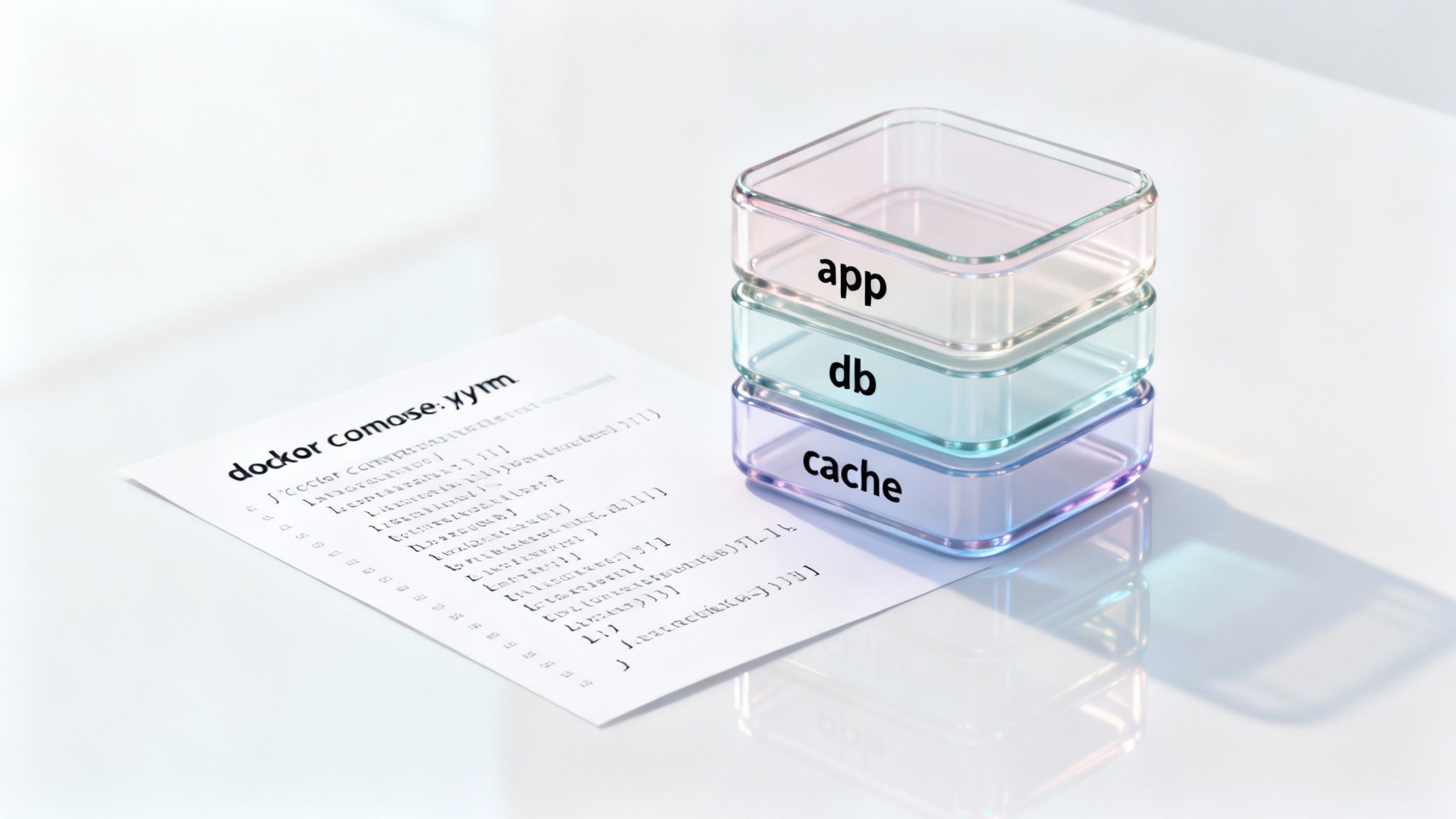

4. Docker & Docker Compose for Containerized Infrastructure

Docker revolutionized software development by packaging applications and their dependencies into standardized units called containers. This approach, a core infrastructure as code example, uses a Dockerfile to codify the exact environment an application needs to run. Docker Compose extends this by using a declarative YAML file to define and orchestrate multi-container applications, making it simple to manage complex services like an app, database, and cache as a single unit.
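Compose decides startup order from each service’s `depends_on` list. As a rough model of that behavior (not Compose’s actual implementation), this Python sketch topologically sorts a hypothetical four-service stack with the standard-library `graphlib` module, available since Python 3.9.

```python
from graphlib import TopologicalSorter
from typing import Dict, List

# Hypothetical services and their `depends_on` lists, as a Compose file would declare them.
services: Dict[str, List[str]] = {
    "web": ["api"],
    "api": ["db", "cache"],
    "db": [],
    "cache": [],
}


def startup_order(deps: Dict[str, List[str]]) -> List[str]:
    """Return an order in which every service starts after its dependencies."""
    # TopologicalSorter expects node -> predecessors, which matches depends_on.
    return list(TopologicalSorter(deps).static_order())


print(startup_order(services))
```

A circular `depends_on` chain makes `static_order()` raise `graphlib.CycleError`, much as Compose refuses a dependency cycle. Note also that plain `depends_on` only waits for a dependency to start; waiting until it is ready requires a health check combined with `condition: service_healthy`.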

This container-centric model ensures that an application runs identically and reliably regardless of the underlying host environment. It bridges the gap between development and operations by creating a consistent, portable artifact that can be deployed anywhere from a developer’s laptop to a production cluster. This consistency drastically reduces “it works on my machine” issues and streamlines the entire delivery pipeline.

Strategic Breakdown and Application

Docker’s primary strength is creating immutable, self-contained application environments. Companies like Spotify and PayPal leverage it to manage microservices and containerize legacy applications, improving deployment speed and isolation. For a startup, Docker Compose allows developers to spin up a full-stack local development environment with a single command. For an enterprise, it standardizes application packaging, simplifying security scanning and compliance.

Key Tactical Insights:

- Optimized Image Builds: Use multi-stage builds in your `Dockerfile`. This technique lets one stage build and compile the application (with all its build dependencies) while a separate, much smaller stage carries only the runtime artifacts, significantly reducing image size and attack surface.

- Secure and Efficient Context: Add a `.dockerignore` file to exclude unnecessary files like logs, local development configurations, and build artifacts from the build context. This keeps sensitive data out of your images and speeds up the build.

- Container Health Monitoring: Define health checks in your `Dockerfile` or `docker-compose.yml`. This lets the Docker engine report whether your application is actually working, a status that orchestrators such as Docker Swarm use to route traffic only to healthy containers and to replace failing ones automatically.
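The health-check rule itself is simple enough to model directly. Docker marks a container `healthy` once a check passes and `unhealthy` only after a configurable number of consecutive failures (`--retries`, default 3). The Python sketch below reproduces just that state transition. It is an illustration, not Docker code, and it ignores `--start-period`, `--interval`, and timeouts.

```python
from typing import Iterable


def health_status(results: Iterable[bool], retries: int = 3) -> str:
    """Replay a sequence of check results and return the final health status."""
    status = "starting"  # no check has passed yet
    consecutive_failures = 0
    for passed in results:
        if passed:
            status = "healthy"
            consecutive_failures = 0
        else:
            consecutive_failures += 1
            if consecutive_failures >= retries:
                status = "unhealthy"
    return status
```

A single failed probe therefore does not flip a healthy container; only a sustained run of failures does, which keeps brief hiccups from triggering restarts.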

Actionable Takeaway: Adopting a containerization strategy with Docker is fundamental for modern application development and deployment. It serves as the foundational layer for microservices, serverless architectures, and advanced DevOps automation. If you’re building containerized solutions and seeking to scale your team, consider engaging with a dedicated offshore development team proficient in these modern practices.

5. Kubernetes Manifests for Container Orchestration

Kubernetes has become the de facto standard for container orchestration, and at its core are declarative YAML manifests. These files are a powerful form of Infrastructure as Code, allowing teams to define the desired state of their containerized applications, including pods, services, deployments, and storage. Instead of manually running commands, engineers describe the “what” in a manifest, and the Kubernetes control plane works relentlessly to make the cluster’s actual state match that description. This declarative model provides self-healing, automated scaling, and predictable, version-controlled application deployments.
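The control-plane behavior described above is a reconciliation loop: observe the actual state, compare it with the declared spec, and act to close the gap. Here is a deliberately tiny Python model of a ReplicaSet-style controller. The `reconcile` function and pod names are hypothetical, and real controllers watch the API server rather than receiving plain lists.

```python
import itertools
from typing import List

_pod_ids = itertools.count(1)  # source of unique suffixes for new pod names


def reconcile(desired_replicas: int, running: List[str]) -> List[str]:
    """One pass of a control loop: converge the pod list to the declared replica count."""
    pods = list(running)  # never mutate the observed state in place
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")  # create missing pods
    while len(pods) > desired_replicas:
        pods.pop()  # delete surplus pods
    return pods
```

Because a pass changes nothing when the actual state already matches the spec, the loop can run continuously; a crashed pod simply shows up as a gap that the next pass fills, which is the essence of self-healing.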

This approach to managing containerized infrastructure builds on Google’s experience running billions of containers a week on its internal cluster manager, Borg, and tech leaders like Pinterest use Kubernetes to manage their complex microservices architecture. The manifest-driven workflow ensures that every component of an application is defined as code, making environments easy to replicate, audit, and manage.

Strategic Breakdown and Application

Kubernetes’s true power is unlocked when its declarative manifests are treated as first-class citizens in a GitOps workflow. By storing manifests in a Git repository, teams gain a single source of truth for their application’s entire lifecycle. This model is ideal for fintech companies requiring strict audit trails and for enterprise platforms modernizing their legacy applications with microservices. For a SaaS startup, it provides a scalable foundation that grows with the user base without constant manual intervention.

Key Tactical Insights:

- Templating and Management: Avoid writing raw YAML for every environment. Use tools like Helm to package and manage application charts or Kustomize to apply environment-specific overlays to a base configuration. This dramatically reduces duplication and simplifies configuration management.

- Security and Resource Control: Explicitly define resource requests and limits in your manifests to ensure fair resource scheduling and prevent noisy neighbor problems. Enforce Pod Security Standards through the built-in Pod Security Admission controller; the older PodSecurityPolicy was deprecated and then removed in Kubernetes 1.25.

- Configuration Decoupling: Use ConfigMaps for non-sensitive configuration data and Secrets for sensitive credentials like API keys or database passwords. This separates configuration from your application image, making your deployments more portable. Because Secrets are only base64-encoded by default, pair them with encryption at rest and tight RBAC rules to keep them secure.
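Since `kubectl apply` accepts JSON as well as YAML, a manifest can also be generated programmatically. This sketch builds a Deployment with explicit resource requests and limits and pulls configuration from a ConfigMap and a Secret through `envFrom`. The app name, image, resource figures, and the `web-config`/`web-secrets` object names are hypothetical.

```python
import json


def deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}  # selector and pod labels must match
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Requests drive scheduling; limits cap usage (noisy neighbors).
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"cpu": "500m", "memory": "512Mi"},
                        },
                        # Configuration stays outside the image.
                        "envFrom": [
                            {"configMapRef": {"name": f"{name}-config"}},
                            {"secretRef": {"name": f"{name}-secrets"}},
                        ],
                    }],
                },
            },
        },
    }


print(json.dumps(deployment("web", "registry.example.com/web:1.4.2"), indent=2))
```

Piping the output to `kubectl apply -f -` would submit it, assuming the referenced ConfigMap and Secret already exist in the target namespace.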

Actionable Takeaway: For organizations committed to microservices and cloud-native architectures, mastering Kubernetes manifests is non-negotiable. It provides a robust, declarative framework for managing complex distributed systems. Integrating these manifests into an automated process is a key part of an effective CI/CD pipeline strategy, enabling rapid, reliable, and secure software delivery at scale.

6. Pulumi for Programmatic Infrastructure Code

Pulumi offers a distinct approach to Infrastructure as Code by empowering developers to use familiar, general-purpose programming languages like Python, TypeScript, Go, and C# instead of a domain-specific language. This paradigm shift transforms infrastructure management into a software engineering discipline, allowing teams to leverage loops, functions, classes, and robust testing frameworks directly in their IaC definitions. By treating infrastructure as software, Pulumi enables higher levels of abstraction and logic that are difficult to achieve with purely declarative tools.

The platform works by translating code written in a standard language into a desired state for cloud resources. During deployment, the Pulumi engine compares this desired state with the actual infrastructure state, which it tracks in a state backend, and then calculates and executes the necessary API calls to align them. This provides the predictability of declarative tools combined with the expressive power of imperative programming, offering one of the most flexible infrastructure as code examples available today.

Strategic Breakdown and Application

Pulumi’s core advantage is its appeal to development teams already proficient in languages like Python or Go. Companies like Databricks and even AWS have used Pulumi to automate and streamline their complex cloud infrastructure management. For a tech startup, this means developers can define application infrastructure using the same language as the application itself, unifying the development lifecycle. For an enterprise, it enables the creation of powerful, reusable infrastructure components with embedded business logic and policy enforcement.

Key Tactical Insights:

- Create Reusable Components: Use classes and functions in your chosen language to build powerful, reusable “component resources.” For example, a single Python class could encapsulate an entire serverless application stack, including Lambda functions, API Gateway, and DynamoDB tables, which can be instantiated with just a few lines of code.

- Leverage Full Testing Capabilities: Write unit and integration tests for your infrastructure code using standard testing frameworks like Pytest or Jest. This allows you to validate logic, check configurations, and mock cloud provider interactions before ever deploying, dramatically improving reliability.

- Utilize the Automation API: For advanced use cases, embed the Pulumi engine directly into your applications using the Automation API. This enables dynamic, programmatic infrastructure management, such as spinning up temporary environments for pull request previews or building self-service infrastructure platforms for internal teams.
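The component-plus-test pattern can be sketched without any cloud access. This plain-Python example deliberately avoids the Pulumi SDK so it runs anywhere; in a real Pulumi program the entries would be resource objects (for example, created inside a `pulumi.ComponentResource` subclass) and tests would use Pulumi’s mocking support. `serverless_api`, `ResourceSpec`, and the property names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResourceSpec:
    """Hypothetical stand-in for a cloud resource: a type label, a name, and properties."""
    type: str
    name: str
    props: dict = field(default_factory=dict)


def serverless_api(name: str, memory_mb: int = 256) -> List[ResourceSpec]:
    """Reusable 'component': one call yields a table, a function, and an HTTP API."""
    table = ResourceSpec("dynamodb.Table", f"{name}-table",
                         {"billingMode": "PAY_PER_REQUEST"})
    fn = ResourceSpec("lambda.Function", f"{name}-fn",
                      {"memorySize": memory_mb, "environment": {"TABLE": table.name}})
    api = ResourceSpec("apigatewayv2.Api", f"{name}-api", {"protocolType": "HTTP"})
    return [table, fn, api]


def test_serverless_api_policy() -> None:
    """Unit test in pytest style: enforce policy before anything is deployed."""
    resources = serverless_api("orders")
    fn = next(r for r in resources if r.type == "lambda.Function")
    assert fn.props["memorySize"] <= 1024  # hypothetical cost guardrail
    assert fn.props["environment"]["TABLE"] == "orders-table"
```

The design point is that the component is an ordinary function, so the same language features (parameters, defaults, loops) and the same test runner used for application code apply to infrastructure definitions.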

Actionable Takeaway: For organizations aiming to bridge the gap between development and operations, Pulumi provides a common language and toolset. It empowers software engineers to take full ownership of their infrastructure, which is a key tenet of modern DevOps culture and is critical for building efficient CI/CD automation pipelines.

7. Helm Charts for Kubernetes Package Management

Helm, often called the package manager for Kubernetes, is a critical tool in the cloud-native ecosystem and a prime example of application-level Infrastructure as Code. It streamlines the management of complex Kubernetes applications using “Charts,” which are pre-packaged sets of resource definitions. These Charts use templating to allow for dynamic configuration, making it simple to install, upgrade, and share even the most intricate applications with a single command.

Helm works by packaging YAML manifests into a versioned archive called a Chart. This Chart defines the application’s components, dependencies, and configurable values. When you deploy a Chart, Helm’s template engine generates the final Kubernetes manifests based on your supplied configurations and applies them to the cluster. This process standardizes application deployments, making them repeatable and far less error-prone than managing raw YAML files.

Strategic Breakdown and Application

Helm’s true power lies in its ability to manage the entire lifecycle of a Kubernetes application as a single, cohesive unit. This abstraction is invaluable for DevOps teams. For instance, the Bitnami community maintains over 200 production-ready charts for popular software, allowing teams to deploy complex tools like a Prometheus monitoring stack or an NGINX Ingress Controller in minutes. This dramatically accelerates development and operational workflows.

Key Tactical Insights:

- Environment Customization: Use `values.yaml` files to abstract environment-specific configurations. Maintain separate values files for development, staging, and production to promote a consistent application structure across all stages of the CI/CD pipeline.

- Complex Application Dependencies: Leverage subcharts to manage dependencies. A web application chart, for example, can include a database chart as a dependency, ensuring all required components are deployed and configured together as a single logical unit.

- Automated Chart Validation: Integrate `helm lint` and chart-testing tools into your CI/CD process. This ensures that every chart pushed to your repository is syntactically correct and adheres to best practices, preventing malformed deployments before they reach a cluster.
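Helm layers values in order: the chart’s own `values.yaml` first, then each file passed with `-f`, with later files taking precedence. Nested maps are merged key by key, while scalars and lists are replaced wholesale. This Python sketch approximates that layering for a hypothetical chart and production override; it ignores Helm details such as `--set` flags and using `null` to delete a key.

```python
import copy
from typing import Any, Dict


def merge_values(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Deep-merge maps; scalars and lists in `override` replace those in `base`."""
    result = copy.deepcopy(base)  # leave the chart defaults untouched
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical chart defaults and a production values file.
chart_defaults = {
    "replicaCount": 1,
    "image": {"repository": "web", "tag": "1.0.0"},
    "ingress": {"enabled": False, "hosts": []},
}
production = {
    "replicaCount": 3,
    "image": {"tag": "1.4.2"},
    "ingress": {"enabled": True, "hosts": ["app.example.com"]},
}

print(merge_values(chart_defaults, production))
```

Because only the keys that differ appear in the production file, each environment’s values file stays short and the shared structure lives in one place.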

Actionable Takeaway: For organizations leveraging Kubernetes, adopting Helm is non-negotiable for achieving operational maturity and scalability. It provides a standardized framework for application packaging and deployment, which is a cornerstone of effective DevOps practices and modern software delivery. If you’re building a scalable cloud-native platform and need to streamline your Kubernetes workflows, exploring a managed DevOps solution can accelerate your adoption of tools like Helm.

8. Bicep for Azure Resource Manager Templates

Bicep is a domain-specific language (DSL) developed by Microsoft to simplify the authoring of Azure Resource Manager (ARM) templates. It acts as a transparent abstraction over ARM JSON, providing a much cleaner, more intuitive, and human-readable syntax. When you deploy a Bicep file, it is transpiled into a standard ARM JSON template, allowing you to leverage the full power and reliability of the underlying Azure Resource Manager platform with a superior authoring experience.

This approach provides a first-class IaC solution for teams committed to the Azure ecosystem. Instead of wrestling with complex JSON syntax, engineers can define cloud resources using a concise, declarative language that supports modularity, type safety, and automatic dependency management. This makes Bicep one of the most effective infrastructure as code examples for Azure-native development, reducing complexity and accelerating deployment cycles.

Strategic Breakdown and Application

Bicep’s primary advantage is its seamless integration with the Azure platform, including Azure CLI, PowerShell, and Azure DevOps. This native support ensures day-one availability for new Azure services and features. For enterprises standardizing on Azure, Bicep provides a robust framework for creating reusable, auditable, and compliant infrastructure blueprints. For instance, a financial institution can use Bicep to define a secure landing zone that all new projects must inherit.

Key Tactical Insights:

- Modular Design: Structure your infrastructure using Bicep modules. A module is a self-contained Bicep file that can be referenced from other files, allowing you to build complex environments from smaller, reusable components like a virtual network or a Kubernetes cluster.

- Symbolic References: Reference resources by their symbolic names (for example, `storageAccount.id`) instead of assembling IDs with functions such as `resourceId()` or string concatenation. This makes your code cleaner, less error-prone, and easier to read and maintain, and Bicep automatically infers dependencies from those references.

- Integrated Tooling: Leverage the Bicep extension for Visual Studio Code, which provides rich IntelliSense, code validation, and a linter (also available from the command line as `bicep lint`). This tooling catches common errors before deployment, enforcing best practices and improving code quality.
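Dependency inference from symbolic references can be illustrated with a crude model. The Bicep compiler analyzes the syntax tree and emits `dependsOn` entries in the generated ARM JSON; the Python sketch below only approximates that with regular expressions over hypothetical Bicep-like property expressions, so treat it as an explanation of the idea, not of the compiler.

```python
import re
from typing import Dict, Set

# Hypothetical resources: symbolic name -> property expressions (Bicep-like syntax).
resources: Dict[str, Dict[str, str]] = {
    "vnet": {"addressPrefix": "'10.0.0.0/16'"},
    "subnet": {"parent": "vnet", "addressPrefix": "'10.0.1.0/24'"},
    "nic": {"subnetId": "subnet.id"},
}


def implicit_depends_on(res: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    """Infer each resource's dependencies from references to other symbolic names."""
    names = set(res)
    deps: Dict[str, Set[str]] = {}
    for name, props in res.items():
        tokens: Set[str] = set()
        for expr in props.values():
            code = re.sub(r"'[^']*'", "", expr)  # drop quoted string literals
            tokens |= set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", code))
        deps[name] = (tokens & names) - {name}  # keep only other resources
    return deps


print(implicit_depends_on(resources))
```

Writing `subnet.id` instead of a hand-built ID string is what makes this inference possible: the reference itself carries the dependency, so no explicit `dependsOn` is needed.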

Actionable Takeaway: If your organization operates primarily within the Azure cloud, adopting Bicep is a strategic move to enhance productivity and reduce management overhead. It streamlines ARM template development, making it an essential tool for any team practicing modern DevOps and CI/CD methodologies on Azure.

9. Chef for Infrastructure Automation and Configuration

Chef is a powerful configuration management tool that automates how infrastructure is configured, deployed, and managed across a network. As a classic example of Infrastructure as Code, it uses a Ruby-based domain-specific language (DSL) to create “recipes” and “cookbooks” that define the desired state of servers. A central Chef server stores these cookbooks, and agents installed on each managed node (the “Chef Infra Client”) pull their configurations to ensure they remain compliant.

This pull-based model is highly effective for managing large, complex, and heterogeneous server environments. It ensures consistency and automates repetitive tasks like software installation, patch management, and security policy enforcement. By treating configuration as code, Chef enables versioning, testing, and continuous deployment of infrastructure changes, a core tenet of modern DevOps practices.

Strategic Breakdown and Application

Chef’s strength lies in its procedural approach, giving engineers granular control over the step-by-step process of server configuration. This is particularly valuable in enterprise environments with strict compliance and security requirements. Companies like Facebook and Bloomberg have famously used Chef to manage infrastructure at a massive scale, automating everything from bare-metal provisioning to application deployment.

Key Tactical Insights:

- Local Development and Testing: Use Test Kitchen to develop and test cookbooks in an isolated, local environment. This enables rapid iteration and validation before deploying changes to production systems, significantly reducing the risk of configuration errors.

- Compliance as Code: Implement InSpec, Chef’s open-source testing framework, to write automated tests that verify compliance with security and policy requirements. This turns compliance checks into a continuous, automated part of your CI/CD pipeline.

- Secrets Management: Securely manage sensitive data like passwords, API keys, and certificates using Chef’s encrypted data bags. This prevents hardcoding secrets in your cookbooks and provides a secure mechanism for distributing them to nodes.
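InSpec controls express compliance rules as executable tests. InSpec itself uses a Ruby DSL; the sketch below restates the idea in Python with two hypothetical SSH-hardening controls and a simplified `sshd_config` parser. It honors the rule that the first value for a keyword wins, but ignores `Match` blocks, `Include` directives, and the `Keyword=value` form.

```python
from typing import Dict, List, Tuple


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Parse 'Keyword value' lines; keywords are case-insensitive, first value wins."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings.setdefault(parts[0].lower(), parts[1].strip())
    return settings


# Hypothetical controls in the spirit of an InSpec profile: (id, keyword, expected value).
CONTROLS: List[Tuple[str, str, str]] = [
    ("ssh-01", "permitrootlogin", "no"),
    ("ssh-02", "passwordauthentication", "no"),
]


def evaluate(text: str) -> Dict[str, bool]:
    """Return pass/fail per control; an unset keyword counts as a failure."""
    settings = parse_sshd_config(text)
    return {cid: settings.get(key, "").lower() == expected
            for cid, key, expected in CONTROLS}
```

Run in a CI pipeline against every node’s rendered configuration, checks like these turn a compliance document into a failing build the moment a setting drifts.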

Actionable Takeaway: For organizations managing large fleets of servers or operating in highly regulated industries, Chef provides the procedural control and robust testing framework needed for reliable automation. Adopting Chef is a strategic move to enforce consistency and compliance at scale. If you are exploring how to modernize your IT operations, understanding these kinds of DevOps solutions is a critical first step.

10. Vagrant for Development Environment Provisioning

Vagrant, another tool from the HashiCorp ecosystem, extends Infrastructure as Code principles directly to local development environments. It provides a simple, elegant workflow for managing and provisioning lightweight, reproducible virtual machines. Using a declarative Vagrantfile, developers can define their application’s required operating system, software dependencies, and network configurations, ensuring that everyone on the team works from an identical, production-parity environment.

This tool works by using “providers” like VirtualBox, VMware, or even Docker to run the virtualized environment and “provisioners” like shell scripts, Ansible, or Puppet to install and configure software. By codifying the setup process, Vagrant eliminates the classic “it works on my machine” problem, dramatically reducing onboarding time for new developers and simplifying the debugging process by removing environmental drift.

Strategic Breakdown and Application

Vagrant’s primary strategic value is in standardizing pre-production environments to mirror production as closely as possible. Companies like Mozilla have used it to provide developers with a consistent environment for building and testing Firefox. For enterprise teams, Vagrant can enforce a standard development stack, ensuring all developers use the same versions of databases, interpreters, and system libraries, which is critical for compliance and quality control.

Key Tactical Insights:

- Standardized Box Distribution: Use Vagrant Cloud to host and distribute pre-configured base boxes for your organization. This ensures every developer starts from a vetted, secure, and standardized image.

- Complex Provisioning with Ansible: For applications with complex dependencies, combine Vagrant with an Ansible provisioner. This allows you to leverage powerful Ansible playbooks to configure the guest machine, keeping your `Vagrantfile` clean and your setup logic modular.

- Microservices Development: Leverage Vagrant’s multi-machine feature to define and run an entire microservices architecture on your local machine. Each service can run in its own VM, but they can all be managed and networked together from a single `Vagrantfile`.
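A Vagrantfile is Ruby, and teams usually write it by hand; generating one is only worthwhile when the service list lives elsewhere. Still, rendering it from Python makes the multi-machine structure explicit. In this sketch the service names, the `ubuntu/jammy64` box, the `provisioning/<name>.yml` playbook paths, and the 192.168.56.0/24 private subnet are all assumptions for illustration.

```python
import ipaddress
from typing import List


def render_vagrantfile(services: List[str],
                       subnet: str = "192.168.56.0/24",
                       box: str = "ubuntu/jammy64") -> str:
    """Render a multi-machine Vagrantfile: one VM per service on a shared private network."""
    ips = list(ipaddress.ip_network(subnet).hosts())[9:]  # start at .10, leave low addresses free
    lines = ['Vagrant.configure("2") do |config|', f'  config.vm.box = "{box}"']
    for name, ip in zip(services, ips):
        lines += [
            f'  config.vm.define "{name}" do |node|',
            f'    node.vm.hostname = "{name}"',
            f'    node.vm.network "private_network", ip: "{ip}"',
            '    node.vm.provision "ansible" do |ansible|',
            f'      ansible.playbook = "provisioning/{name}.yml"',
            "    end",
            "  end",
        ]
    lines.append("end")
    return "\n".join(lines) + "\n"


print(render_vagrantfile(["api", "db"]))
```

Each `config.vm.define` block becomes an independently manageable machine (for example, `vagrant up api`), while the shared private network lets the services reach one another by their fixed addresses.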

Actionable Takeaway: Implementing Vagrant is a high-impact, low-effort way to introduce Infrastructure as Code practices into your development workflow. It bridges the gap between development and operations, forming a foundational step in building a robust DevOps culture. By codifying the development environment, you reduce configuration errors and accelerate the entire development lifecycle.

Top 10 Infrastructure-as-Code Tools Comparison

| Tool | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Terraform for Multi-Cloud Infrastructure Provisioning | Moderate to high: HCL and state concepts; advanced features add complexity | CLI, provider credentials, remote state backend recommended | Declarative, versionable multi-cloud infrastructure with tracked state | Provisioning cloud infra, multi-cloud DR, scaling on demand | Provider-agnostic; modular; strong community; readable syntax |
| CloudFormation for AWS-Native Infrastructure | Moderate: JSON/YAML templates can be verbose; AWS-specific semantics | AWS account and IAM; no extra tooling required | AWS stacks with native integrations, change sets and drift detection | AWS-only organizations, serverless apps, compliance-driven deployments | Deep AWS integration; built-in governance and IAM support |
| Ansible for Configuration Management and Orchestration | Low to moderate: YAML playbooks; agentless model is simple to start | Control node + SSH access to targets; Python on targets | Idempotent configuration, application deployments, orchestration across hosts | Multi-platform config, legacy systems, app deployments | Agentless; easy to learn; minimal infra requirements; versatile modules |
| Docker & Docker Compose for Containerized Infrastructure | Low: container workflows and Compose YAML are straightforward | Host with Docker daemon; modest compute; image registry for distribution | Reproducible containerized environments; multi-container apps (single-host) | Dev-to-prod parity, microservices, CI/CD pipelines | Fast, lightweight, consistent environments; large ecosystem |
| Kubernetes Manifests for Container Orchestration | High: complex manifests and operational overhead; cluster management required | Kubernetes cluster (control plane + nodes), monitoring and storage | Highly available, self-healing, autoscaled container orchestration across clusters | Large-scale microservices, multi-cloud container deployments, stateless scaling | Industry-standard orchestration; powerful scaling and ecosystem |
| Pulumi for Programmatic Infrastructure Code | Moderate to high: requires programming language knowledge and tooling | Language runtimes (Python/Go/JS/.NET), CLI, state backend | Programmatic, testable infra with component abstractions and multi-cloud support | Complex dynamic infra, developer-led provisioning, shared logic across clouds | Use familiar languages; richer abstractions; strong testing and reuse |
| Helm Charts for Kubernetes Package Management | Moderate: templating and chart structure add complexity for advanced charts | Kubernetes cluster; Helm client and chart repositories | Packaged, versioned Kubernetes applications with install/rollback | Distributing and managing k8s apps, multi-environment deployments | Simplifies manifest management; dependency handling and rollbacks |
| Bicep for Azure Resource Manager Templates | Low to moderate: simpler than raw ARM JSON; Azure-focused | Azure subscription, Azure CLI/VS tooling | Readable declarative Azure templates compiled to ARM JSON | Azure-native provisioning, enterprise Azure deployments, governance | Cleaner syntax than ARM; full Azure resource support; strong typing |
| Chef for Infrastructure Automation and Configuration | High: agent-based with Ruby DSL; operational overhead | Chef Server or hosted service, agents on nodes, Ruby runtime | Centralized configuration, tested cookbooks, compliant infrastructure | Enterprise multi-platform configuration, compliance-driven automation | Powerful DSL and abstractions; enterprise-grade testing and management |
| Vagrant for Development Environment Provisioning | Low: Vagrantfile patterns are straightforward for developers | Hypervisor (VirtualBox, Hyper-V), local compute for VMs, Vagrant CLI | Reproducible local virtual machine environments for development | Developer onboarding, reproducing prod-like environments locally | Easy dev environment consistency; works with multiple providers |

Your Next Step: Implementing IaC for Tangible Business Results

Throughout this guide, we have worked through infrastructure as code examples spanning the major DevOps tools. From Terraform’s declarative syntax for multi-cloud deployments to Ansible’s task-based approach to configuration management, each example illustrates a core principle: codifying infrastructure is no longer a luxury but a strategic imperative for any organization aiming for agility, reliability, and security.

We’ve seen how CloudFormation and Bicep provide deep, native integration for AWS and Azure ecosystems, respectively, while Pulumi empowers developers to use familiar programming languages. Similarly, Kubernetes manifests and Helm charts have shown us how to manage complex, containerized workloads with precision and repeatability. The common thread connecting these diverse infrastructure as code examples is the transformation of manual, error-prone tasks into automated, version-controlled, and collaborative workflows. This shift is not merely technical; it’s a fundamental business enabler that accelerates time-to-market, reduces operational overhead, and fortifies your security posture.

Distilling Strategy from Syntax: Key Takeaways

The true value of IaC lies not just in writing scripts, but in the strategic outcomes it unlocks. The examples provided were designed to move beyond simple “hello world” scenarios and highlight replicable patterns that deliver tangible business results.

Here are the most critical takeaways to internalize:

- Modularity is Your Scalability Engine: As demonstrated in the Terraform and Pulumi examples, breaking down infrastructure into reusable, composable modules is paramount. This approach drastically reduces code duplication, simplifies maintenance, and allows teams to build complex systems from standardized, pre-approved building blocks.

- CI/CD Integration is Non-Negotiable: IaC realizes its full potential when integrated into a CI/CD pipeline. Automating the testing (linting, static analysis) and deployment of infrastructure changes ensures consistency, catches errors early, and creates a fast, reliable path from code commit to production-ready infrastructure.

- Security is a “Shift-Left” Activity: By defining security policies, firewall rules, and IAM roles directly in code, you embed security into the development lifecycle. This makes security posture auditable, version-controlled, and transparent, moving it from a reactive gatekeeper function to a proactive, collaborative effort.

- Declarative vs. Imperative is a Strategic Choice: Understanding the difference is key. Declarative tools (like Terraform, CloudFormation, and Kubernetes) focus on the desired end state and let the tool work out how to reach it. Imperative, or procedural, tools (like Ansible and Chef) execute an ordered sequence of tasks or resources to reach that state, although the individual modules and resources are typically idempotent. Your choice depends on the task at hand: provisioning is often best served by declarative models, while complex, order-dependent configuration management can benefit from a procedural approach.
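To make the declarative model and the shift-left idea more concrete, here is a minimal, tool-agnostic sketch in Python. It is a toy under stated assumptions: the resource addresses (such as `aws_instance.app`), the attribute dictionaries, and the functions `plan`, `policy_violations`, and `ci_gate` are hypothetical and are not the API of Terraform or of any policy engine. Real tools also handle dependency graphs, provider-specific semantics, and persisted state, all of which this sketch ignores. What it shows is the core loop: you declare the desired state, the tool diffs it against the current state to derive create, update, and delete actions, and a policy check in CI can block the change before anything is applied.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

# Toy state model (hypothetical): resource address -> attribute dict.
# Addresses mimic Terraform's "type.name" style purely for illustration.
State = Dict[str, Dict[str, Any]]


@dataclass(frozen=True)
class Action:
    kind: str     # "create", "update", or "delete"
    address: str  # which resource the action targets


def plan(desired: State, current: State) -> List[Action]:
    """Diff desired vs. current state; the caller never lists the steps."""
    actions: List[Action] = []
    # Declared but missing -> create.
    for address in sorted(desired.keys() - current.keys()):
        actions.append(Action("create", address))
    # Present in both but drifted from the declaration -> update.
    for address in sorted(desired.keys() & current.keys()):
        if desired[address] != current[address]:
            actions.append(Action("update", address))
    # Present but no longer declared -> delete.
    for address in sorted(current.keys() - desired.keys()):
        actions.append(Action("delete", address))
    return actions


def policy_violations(desired: State) -> List[str]:
    """Example shift-left rule (hypothetical): no SSH open to the internet."""
    violations = []
    for address, attrs in sorted(desired.items()):
        for rule in attrs.get("ingress", []):
            if rule.get("port") == 22 and rule.get("cidr") == "0.0.0.0/0":
                violations.append(f"{address}: SSH open to 0.0.0.0/0")
    return violations


def ci_gate(desired: State, current: State) -> List[Action]:
    """CI step: fail fast on policy violations, otherwise return the plan."""
    violations = policy_violations(desired)
    if violations:
        raise ValueError("policy check failed: " + "; ".join(violations))
    return plan(desired, current)


if __name__ == "__main__":
    current = {
        "aws_instance.app": {"type": "t3.micro"},
        "aws_s3_bucket.logs": {"versioning": False},
    }
    desired = {
        "aws_instance.app": {"type": "t3.small"},
        "aws_security_group.web": {"ingress": [{"port": 443, "cidr": "0.0.0.0/0"}]},
    }
    print([(a.kind, a.address) for a in ci_gate(desired, current)])
    # [('create', 'aws_security_group.web'), ('update', 'aws_instance.app'), ('delete', 'aws_s3_bucket.logs')]
    print(plan(desired, desired))  # prints [] because nothing has drifted
```

Running `plan` again with the desired state as the current state returns an empty list, which is the idempotency property declarative tools rely on. An imperative script would instead encode those three actions as explicit, ordered steps written by the engineer.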

Your Actionable Roadmap to IaC Adoption

Moving from theory to practice requires a deliberate, phased approach. Instead of attempting a “big bang” migration, focus on incremental wins that build momentum and demonstrate value quickly.

Follow these steps to begin your implementation journey:

- Identify the Highest-Impact Bottleneck: Start by auditing your current processes. Where does your team spend the most manual effort? Is it provisioning developer environments, deploying a new microservice, or updating security group rules? Target this area for your first IaC pilot project.

- Select the Right Tool for the Job: Using the examples and comparison table in this article as a guide, choose a tool that fits your team’s existing skill set and technology stack. If you’re a Python shop building on AWS, Pulumi might be a natural fit. If your goal is multi-cloud consistency, Terraform is the most widely adopted choice.

- Implement a Non-Critical Pilot: Select a small, low-risk workload, such as a staging environment for a single application or a monitoring stack. The goal is to learn the tool’s workflow, establish initial best practices for your team (like repository structure and naming conventions), and achieve a measurable win without risking production stability.

- Document and Socialize Your Success: Once the pilot is complete, document the process, the code, and the outcomes. Quantify the benefits: “We reduced new environment setup time from 4 hours to 15 minutes.” Share this success with other teams to build organizational buy-in for broader adoption.

By following this roadmap, you can systematically replace fragile, manual processes with a robust, automated foundation, freeing your engineers to focus on delivering value to your customers.

Ready to move beyond examples and implement a world-class IaC strategy that drives real business outcomes? Group 107 specializes in DevOps transformation, helping enterprises and startups alike build secure, scalable, and automated infrastructure. Our experts can accelerate your adoption of these advanced practices, ensuring your platform is a competitive advantage, not an operational burden.

Explore our DevOps as a Service offerings to build your foundation for growth.